Cylc documentation

The Cylc Suite Engine

Current release: 7.9.3

Released Under the GNU GPL v3.0 Software License

Copyright (C) NIWA & British Crown (Met Office) & Contributors.

Cylc (“silk”) is a workflow engine for cycling systems - it orchestrates distributed suites of interdependent cycling tasks that may continue to run indefinitely.

1. Introduction: How Cylc Works

This section of the user guide is being rewritten for Cylc 8. For the moment we’ve removed some outdated information, leaving just the description of how Cylc manages cycling workflows. For a more up-to-date description see references cited on the Cylc web site.

1.1. Dependence Between Tasks

1.1.1. Intra-Cycle Dependence

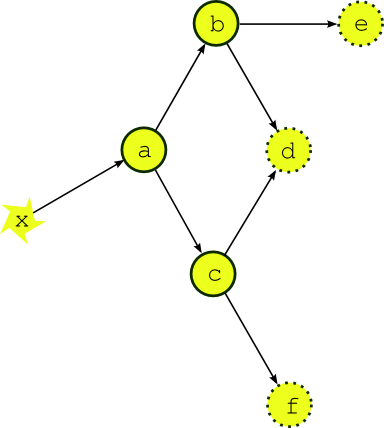

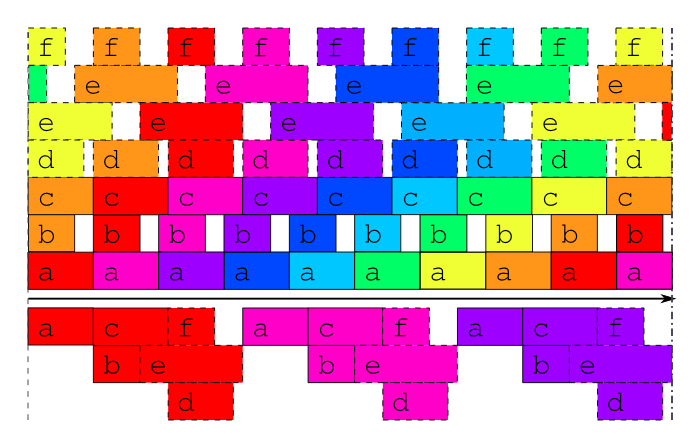

Most dependence between tasks applies within a single cycle point. Fig. 1 shows the dependency diagram for a single cycle point of a simple example suite consisting of, say, three scientific models (a, b, and c) and three post processing or product generation tasks (d, e and f). A scheduler capable of handling this must manage, within a single cycle point, multiple parallel streams of execution that branch when one task generates output for several downstream tasks, and merge when one task takes input from several upstream tasks.

Fig. 1 A single cycle point dependency graph for a simple suite. The dependency graph for a single cycle point of a simple example suite. Tasks a, b, and c represent models, d, e and f are post processing or product generation tasks, and x represents external data that the upstream model depends on.

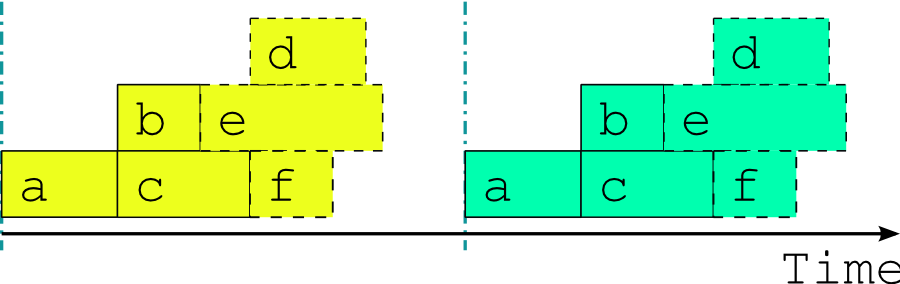

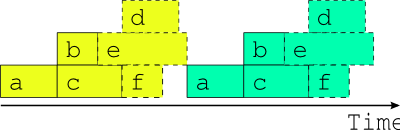

Fig. 2 A single cycle point job schedule for real time operation. The optimal job schedule for two consecutive cycle points of our example suite during real time operation, assuming that all tasks trigger off upstream tasks finishing completely. The horizontal extent of a task bar represents its execution time, and the vertical blue lines show when the external driving data becomes available.

Fig. 2 shows the optimal job schedule for two consecutive cycle points of the example suite in real time operation, given execution times represented by the horizontal extent of the task bars. There is a time gap between cycle points as the suite waits on new external driving data. Each task in the example suite happens to trigger off upstream tasks finishing, rather than off any intermediate output or event; this is merely a simplification that makes for clearer diagrams.

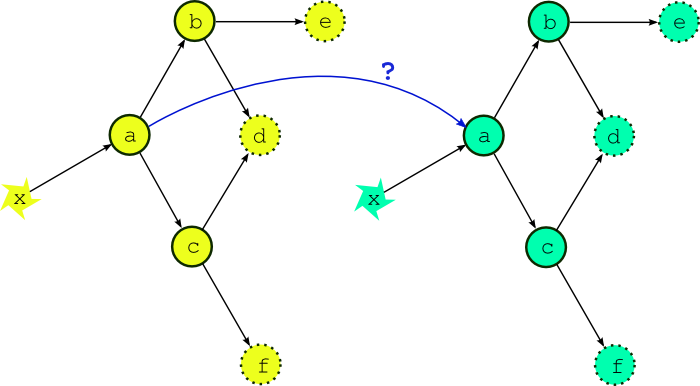

Fig. 3 What if the external driving data is available early? If the external driving data is available in advance, can we start running the next cycle point early?

Fig. 4 Attempted overlap of consecutive single-cycle-point job schedules. A naive attempt to overlap two consecutive cycle points using the single-cycle-point dependency graph. The red shaded tasks will fail because of dependency violations (or will not be able to run because of upstream dependency violations).

Fig. 5 The only safe multi-cycle-point job schedule? The best that can be done in general when inter-cycle dependence is ignored.

Now the question arises, what happens if the external driving data for upcoming cycle points is available in advance, as it would be after a significant delay in operations, or when running a historical case study? While the model a appears to depend only on the external data x, in fact it could also depend on its own previous instance for the model background state used in initializing the new run (this is almost always the case for atmospheric models used in weather forecasting). Thus, as alluded to in Fig. 3, task a could in principle start as soon as its predecessor has finished. Fig. 4 shows, however, that starting a whole new cycle point at this point is dangerous - it results in dependency violations in half of the tasks in the example suite. In fact the situation could be even worse than this - imagine that task b in the first cycle point is delayed for some reason after the second cycle point has been launched. Clearly we must consider handling inter-cycle dependence explicitly or else agree not to start the next cycle point early, as is illustrated in Fig. 5.

1.1.2. Inter-Cycle Dependence

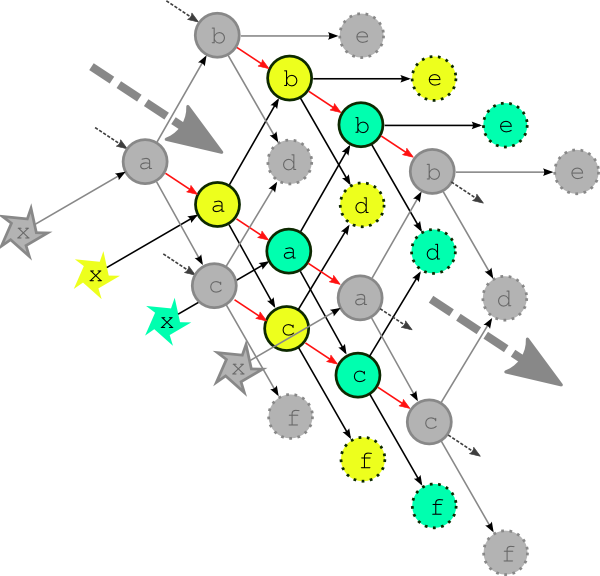

Weather forecast models typically depend on their own most recent previous forecast for background state or restart files of some kind (this is called warm cycling) but there can also be inter-cycle dependence between other tasks. In an atmospheric forecast analysis suite, for instance, the weather model may generate background states for observation processing and data-assimilation tasks in the next cycle point as well as for the next forecast model run. In real time operation inter-cycle dependence can be ignored because it is automatically satisfied when one cycle point finishes before the next begins. If it is not ignored it drastically complicates the dependency graph by blurring the clean boundary between cycle points. Fig. 6 illustrates the problem for our simple example suite assuming minimal inter-cycle dependence: the warm cycled models (a, b, and c) each depend on their own previous instances.

For this reason, and because we tend to see forecasting suites in terms of their real time characteristics, other metaschedulers have ignored inter-cycle dependence and are thus restricted to running entire cycle points in sequence at all times. This does not affect normal real time operation but it can be a serious impediment when advance availability of external driving data makes it possible, in principle, to run some tasks from upcoming cycle points before the current cycle point is finished - as was suggested at the end of the previous section. This can occur, for instance, after operational delays (late arrival of external data, system maintenance, etc.) and to an even greater extent in historical case studies and parallel test suites started behind a real time operation. It can be a serious problem for suites that have little downtime between forecast cycle points and therefore take many cycle points to catch up after a delay. Without taking account of inter-cycle dependence, the best that can be done, in general, is to reduce the gap between cycle points to zero as shown in Fig. 5. A limited crude overlap of the single cycle point job schedule may be possible for specific task sets but the allowable overlap may change if new tasks are added, and it is still dangerous: it amounts to running different parts of a dependent system as if they were not dependent and as such it cannot be guaranteed that some unforeseen delay in one cycle point, after the next cycle point has begun, (e.g. due to resource contention or task failures) won’t result in dependency violations.

Fig. 6 The complete multi-cycle-point dependency graph. The complete dependency graph for the example suite, assuming the least possible inter-cycle dependence: the models (a, b, and c) depend on their own previous instances. The dashed arrows show connections to previous and subsequent cycle points.

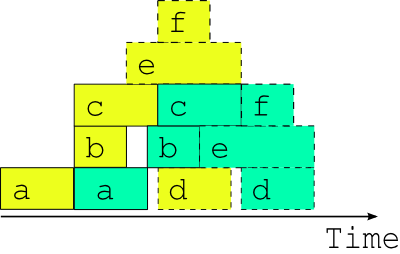

Fig. 7 The optimal two-cycle-point job schedule. The optimal two cycle job schedule when the next cycle’s driving data is available in advance, possible in principle when inter-cycle dependence is handled explicitly.

Fig. 7 shows, in contrast to Fig. 4, the optimal two cycle point job schedule obtained by respecting all inter-cycle dependence. This assumes no delays due to resource contention or otherwise - i.e. every task runs as soon as it is ready to run. The scheduler running this suite must be able to adapt dynamically to external conditions that impact on multi-cycle-point scheduling in the presence of inter-cycle dependence or else, again, risk bringing the system down with dependency violations.
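The difference between the two scheduling strategies can be sketched with a toy earliest-start calculation. This is not Cylc code and not Cylc's algorithm, just a minimal Python illustration. The graph edges and run times below are assumptions made up for the example (the text does not list the edges of Fig. 1), resources are assumed unlimited, and the external data x is assumed to be available in advance so it is left out.

```python
# Toy earliest-start scheduler: a sketch, not Cylc's algorithm.
# ASSUMPTION: the edges and durations below are invented for illustration.

# task -> upstream tasks in the same cycle point
INTRA = {"a": [], "b": ["a"], "c": ["a"], "d": ["b"], "e": ["c"], "f": ["c"]}
# task -> upstream tasks in the previous cycle point (warm cycled models)
INTER = {"a": ["a"], "b": ["b"], "c": ["c"]}
# task -> run time in arbitrary time units
DURATION = {"a": 4, "b": 3, "c": 2, "d": 1, "e": 1, "f": 1}
# a topological ordering of the intra-cycle graph
ORDER = ["a", "b", "c", "d", "e", "f"]


def schedule(n_cycles, explicit_inter_cycle):
    """Return {(cycle, task): (start, end)}.

    If explicit_inter_cycle is False, a cycle point may not start until
    the whole previous cycle point has finished (no overlap).
    """
    times = {}
    for cycle in range(n_cycles):
        barrier = 0
        if cycle > 0 and not explicit_inter_cycle:
            # Whole-cycle sequencing: wait for everything in the last cycle.
            barrier = max(
                end for (c, _), (_, end) in times.items() if c == cycle - 1
            )
        for task in ORDER:
            ready = barrier
            for upstream in INTRA[task]:
                ready = max(ready, times[(cycle, upstream)][1])
            if cycle > 0:
                for upstream in INTER.get(task, []):
                    ready = max(ready, times[(cycle - 1, upstream)][1])
            times[(cycle, task)] = (ready, ready + DURATION[task])
    return times


def makespan(times):
    """Time at which the last task finishes."""
    return max(end for _, end in times.values())
```

With these made-up numbers, two cycle points take 16 time units when run strictly in sequence, and 12 when each task is constrained only by its own intra- and inter-cycle dependencies.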

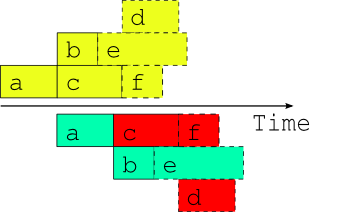

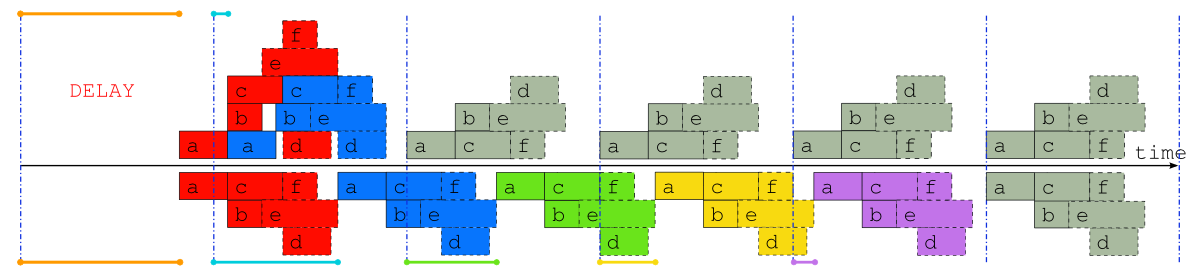

Fig. 8 Comparison of job schedules after a delay. Job schedules for the example suite after a delay of almost one whole cycle point, when inter-cycle dependence is taken into account (above the time axis), and when it is not (below the time axis). The colored lines indicate the time that each cycle point is delayed, and normal “caught up” cycle points are shaded gray.

Fig. 9 Optimal job schedule when all external data is available. Job schedules for the example suite in case study mode, or after a long delay, when the external driving data are available many cycle points in advance. Above the time axis is the optimal schedule obtained when the suite is constrained only by its true dependencies, as in Fig. 3, and underneath is the best that can be done, in general, when inter-cycle dependence is ignored.

To further illustrate the potential benefits of proper inter-cycle dependency handling, Fig. 8 shows an operational delay of almost one whole cycle point in a suite with little downtime between cycle points. Above the time axis is the optimal schedule that is possible in principle when inter-cycle dependence is taken into account, and below it is the only safe schedule possible in general when it is ignored. In the former case, even the cycle point immediately after the delay is hardly affected, and subsequent cycle points are all on time, whilst in the latter case it takes five full cycle points to catch up to normal real time operation [1].

Similarly, Fig. 9 shows example suite job schedules for an historical case study, or when catching up after a very long delay; i.e. when the external driving data are available many cycle points in advance. Task a, which as the most upstream model is likely to be a resource intensive atmosphere or ocean model, has no upstream dependence on co-temporal tasks and can therefore run continuously, regardless of how much downstream processing is yet to be completed in its own, or any previous, cycle point (actually, task a does depend on co-temporal task x which waits on the external driving data, but that returns immediately when the data is available in advance, so the result stands). The other models can also cycle continuously or with a short gap between, and some post processing tasks, which have no previous-instance dependence, can run continuously or even overlap (e.g. e in this case). Thus, even for this very simple example suite, tasks from three or four different cycle points can in principle run simultaneously at any given time.

In fact, if our tasks are able to trigger off internal outputs of upstream tasks (message triggers) rather than waiting on full completion, then successive instances of the models could overlap as well (because model restart outputs are generally completed early in the run) for an even more efficient job schedule.

| [1] | Note that simply overlapping the single cycle point schedules of Fig. 2 from the same start point would have resulted in dependency violation by task c. |

2. Cylc Screenshots

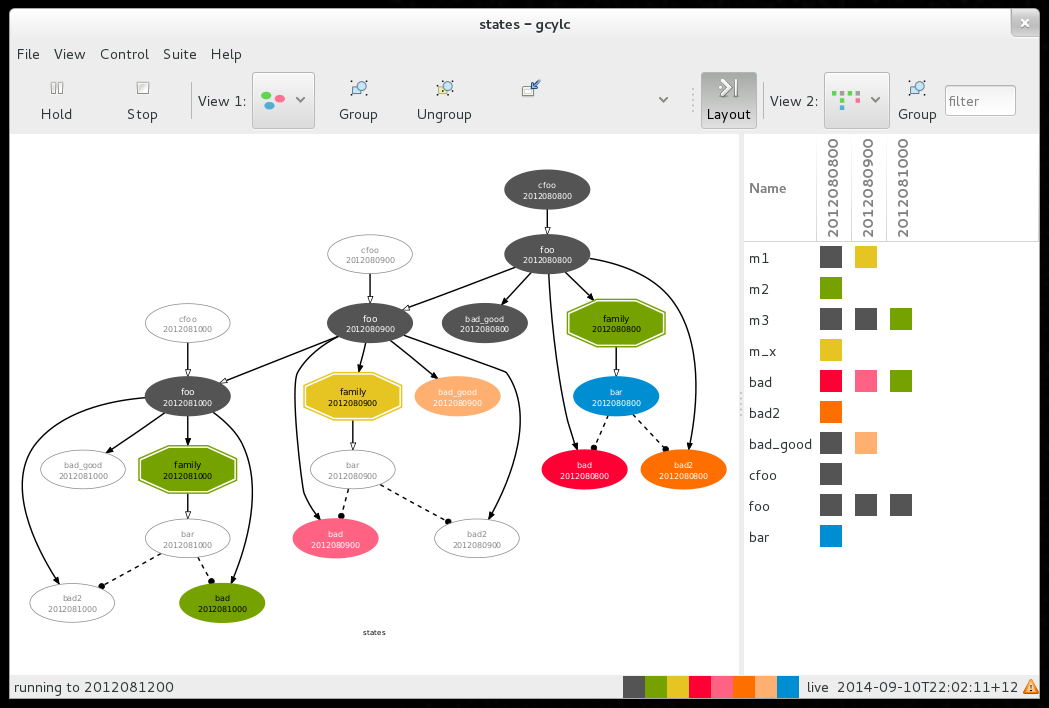

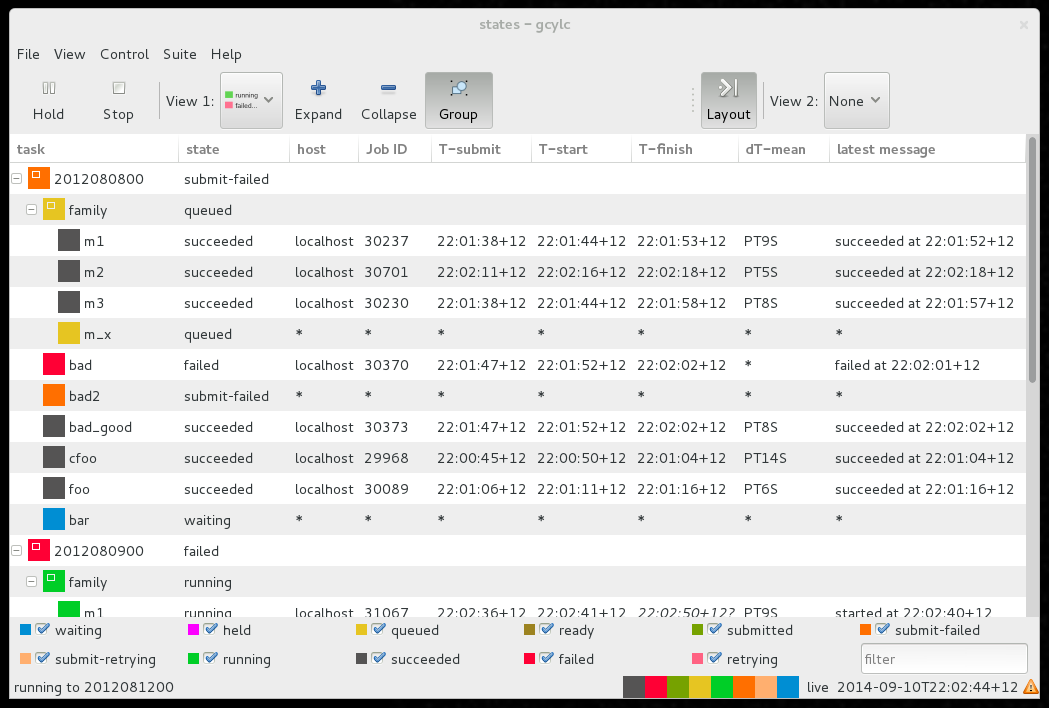

Fig. 10 gcylc graph and dot views.

Fig. 11 gcylc text view.

Fig. 12 gscan multi-suite state summary GUI.

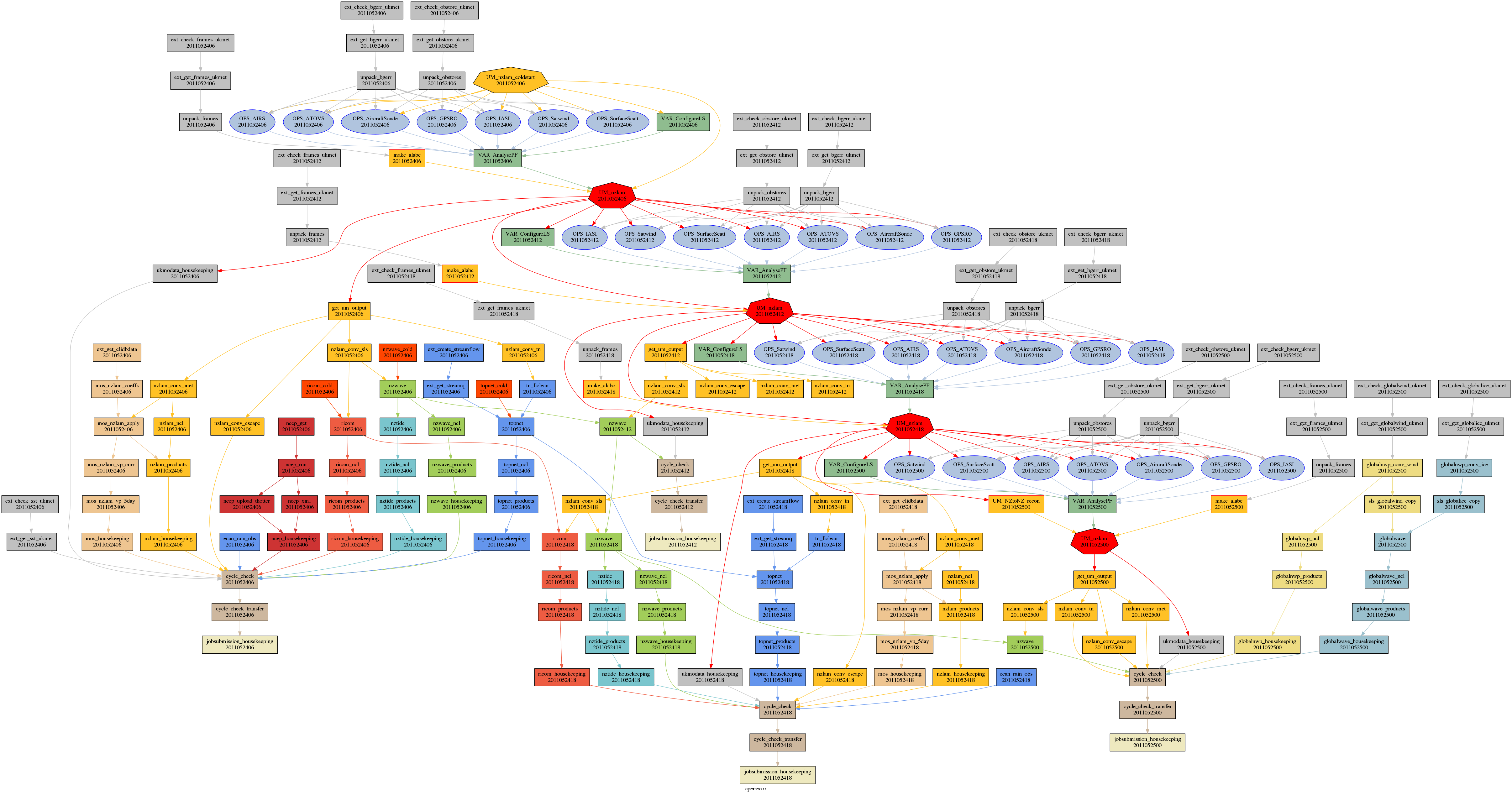

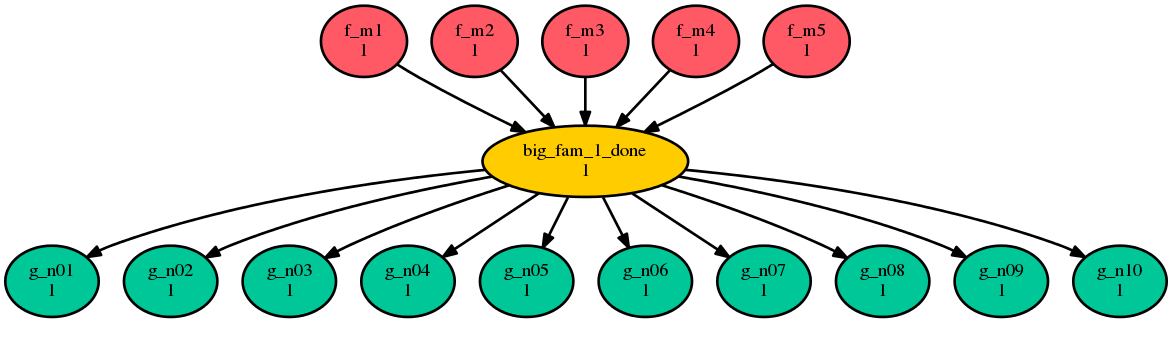

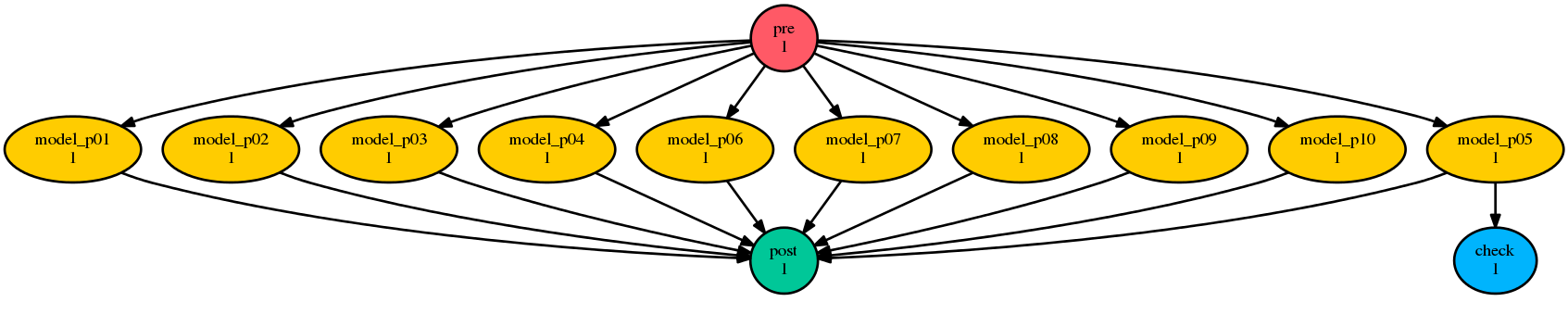

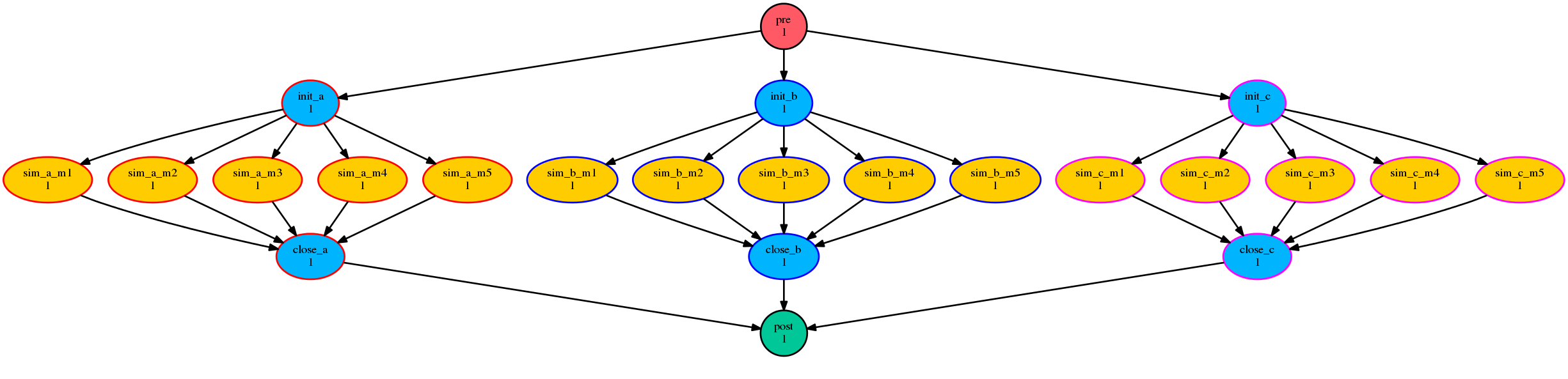

Fig. 13 A large-ish suite graphed by cylc.

3. Installation

Cylc runs on Linux. It is tested quite thoroughly on modern RHEL and Ubuntu distros. Some users have also managed to make it work on other Unix variants including Apple OS X, but they are not officially tested and supported.

3.1. Third-Party Software Packages

Python 2 >= 2.7 is required. Python 2 >= 2.7.9 is recommended for the best security. Python 2 should already be installed in your Linux system.

For Cylc’s HTTPS communications layer:

- OpenSSL

- pyOpenSSL

- python-requests

- python-urllib3 - should be bundled with python-requests

The following packages are highly recommended, but are technically optional as you can construct and run suites without dependency graph visualisation or the Cylc GUIs:

PyGTK - Python bindings for the GTK+ GUI toolkit.

Note

PyGTK typically comes with your system Python 2 version. It is allegedly quite difficult to install if you need to do so for another Python version. At time of writing, for instance, there are no functional PyGTK conda packages available.

Note that we need to do import gtk in Python, not import pygtk.

In CentOS 7.6, for example, the Cylc GUIs run “out of the box” with the system-installed Python 2.7.5. Under the hood, the Python “gtk” package is provided by the “pygtk2” yum package. (The “pygtk” Python module, which we don’t need, is supplied by the “pygobject2” yum package.)

Graphviz - graph layout engine (tested 2.36.0)

Pygraphviz - Python Graphviz interface (tested 1.2). To build this you may need some devel packages too:

- python-devel

- graphviz-devel

Note

The cylc graph command for static workflow visualization requires PyGTK, but we provide a separate cylc ref-graph command to print out a simple text-format “reference graph” without PyGTK.

The Cylc Review service does not need any additional packages.

The following packages are necessary for running all the tests in Cylc:

To generate the HTML User Guide, you will need:

- Sphinx of compatible version, >=1.5.3 and <=1.7.9.

In most modern Linux distributions all of the software above can be installed via the system package manager. Otherwise download packages manually and follow their native installation instructions. To check that all packages are installed properly:

$ cylc check-software

Checking your software...

Individual results:

===============================================================================

Package (version requirements) Outcome (version found)

===============================================================================

*REQUIRED SOFTWARE*

Python (2.7+, <3).....................FOUND & min. version MET (2.7.12.final.0)

*OPTIONAL SOFTWARE for the GUI & dependency graph visualisation*

Python:pygraphviz (any)...........................................NOT FOUND (-)

graphviz (any)...................................................FOUND (2.26.0)

Python:pygtk (2.0+)...............................................NOT FOUND (-)

*OPTIONAL SOFTWARE for the HTTPS communications layer*

Python:requests (2.4.2+)......................FOUND & min. version MET (2.11.1)

Python:urllib3 (any)..............................................NOT FOUND (-)

Python:OpenSSL (any)..............................................NOT FOUND (-)

*OPTIONAL SOFTWARE for the configuration templating*

Python:EmPy (any).................................................NOT FOUND (-)

*OPTIONAL SOFTWARE for the HTML documentation*

Python:sphinx (1.5.3+).........................FOUND & min. version MET (1.7.0)

===============================================================================

Summary:

****************************

Core requirements: ok

Full-functionality: not ok

****************************

If errors are reported then the packages concerned are either not installed or not in your Python search path.

Note

cylc check-software has become quite trivial as we’ve removed or bundled some former dependencies, but in future we intend to make it print a comprehensive list of library versions etc. to include with bug reports.

To check for specific packages only, supply these as arguments to the check-software command, either in the form used in the output of the bare command, without any parent package prefix and colon, or alternatively all in lower-case, should the given form contain capitals. For example:

$ cylc check-software graphviz Python urllib3

With arguments, check-software provides an exit status indicating a collective pass (zero) or a failure of that number of packages to satisfy the requirements (non-zero integer).

3.2. Software Bundled With Cylc

Cylc bundles several third party packages which do not need to be installed separately.

- cherrypy 6.0.2 (slightly modified): a pure Python HTTP framework that we use as a web server for communication between server processes (suite server programs) and client programs (running tasks, GUIs, CLI commands).

- Client communication is via the Python requests library if available (recommended) or else pure Python via urllib2.

- Jinja2 2.10: a full featured template engine for Python, and its dependency MarkupSafe 0.23; both BSD licensed.

- the xdot graph viewer (modified), LGPL licensed.

3.3. Installing Cylc

Cylc releases can be downloaded from GitHub.

The wrapper script usr/bin/cylc should be installed to the system executable search path (e.g. /usr/local/bin/) and modified slightly to point to a location such as /opt where successive Cylc releases will be unpacked side by side.

To install Cylc, unpack the release tarball in the right location, e.g. /opt/cylc-7.8.2, type make inside the release directory, and set site defaults - if necessary - in a site global config file (below).

Make a symbolic link from cylc to the latest installed version: ln -s /opt/cylc-7.8.2 /opt/cylc. This will be invoked by the central wrapper if a specific version is not requested. Otherwise, the wrapper will attempt to invoke the Cylc version specified in $CYLC_VERSION, e.g. CYLC_VERSION=7.8.2. This variable is automatically set in task job scripts to ensure that jobs use the same Cylc version as their parent suite server program. It can also be set by users, manually or in login scripts, to fix the Cylc version in their environment.
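The version selection described above can be sketched as follows. The real wrapper is a shell script; this Python function only illustrates the decision it makes. The function name resolve_cylc_home is hypothetical, and the cylc-VERSION directory naming under /opt is an assumption taken from the /opt/cylc-7.8.2 example.

```python
def resolve_cylc_home(environ, install_root="/opt"):
    """Return the install directory a wrapper would dispatch to (sketch).

    ASSUMPTION: releases are unpacked as <install_root>/cylc-<version>
    and <install_root>/cylc is a symlink to the default version.
    """
    version = environ.get("CYLC_VERSION")
    if version:
        # A specific version was requested via the environment.
        return "{0}/cylc-{1}".format(install_root, version)
    # No version requested: fall back to the default symlink.
    return "{0}/cylc".format(install_root)
```

For example, resolve_cylc_home({"CYLC_VERSION": "7.8.2"}) gives /opt/cylc-7.8.2, and resolve_cylc_home({}) gives /opt/cylc.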

Installing subsequent releases is just a matter of unpacking the new tarballs next to the previous releases, running make in them, and copying in (possibly with modifications) the previous site global config file.

3.3.1. Local User Installation

It is easy to install Cylc under your own user account if you don’t have root or sudo access to the system: just put the central Cylc wrapper in $HOME/bin/ (making sure that is in your $PATH) and modify it to point to a directory such as $HOME/cylc/ where you will unpack and install release tarballs. Local installation of third party dependencies like Graphviz is also possible, but that depends on the particular installation methods used and is outside of the scope of this document.

3.3.2. Create A Site Config File

Site and user global config files define some important parameters that affect all suites, some of which may need to be customized for your site. See Global (Site, User) Configuration Files for how to generate an initial site file and where to install it. All legal site and user global config items are defined in Global (Site, User) Config File Reference.

3.3.3. Configure Site Environment on Job Hosts

If your users submit task jobs to hosts other than the hosts they use to run their suites, you should ensure that the job hosts have the correct environment for running cylc. A cylc suite generates task job scripts that normally invoke bash -l, i.e. it will invoke bash as a login shell to run the job script. Users and sites should ensure that their bash login profiles are able to set up the correct environment for running cylc and their task jobs.

Your site administrator may customise the environment for all task jobs by adding a <cylc-dir>/etc/job-init-env.sh file and populating it with the appropriate contents. If customisation is still required, you can add your own ${HOME}/.cylc/job-init-env.sh file and populate it with the appropriate contents.

- ${HOME}/.cylc/job-init-env.sh

- <cylc-dir>/etc/job-init-env.sh

The job will attempt to source the first of these files it finds to set up its environment.
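The "first file found" rule can be sketched like this. The job script itself is bash; the Python function below, with the hypothetical name find_job_init_env, only illustrates the lookup order given above (user file first, then site file) and is not taken from the Cylc source.

```python
import os


def find_job_init_env(home, cylc_dir):
    """Return the first job-init-env.sh that exists, or None (sketch).

    The user file is checked before the site file, following the order
    in which the two locations are listed in the text.
    """
    candidates = [
        os.path.join(home, ".cylc", "job-init-env.sh"),
        os.path.join(cylc_dir, "etc", "job-init-env.sh"),
    ]
    for path in candidates:
        if os.path.isfile(path):
            return path
    return None
```

Only one file is returned: under this rule a user file, if present, replaces the site file rather than being sourced in addition to it.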

3.3.4. Configuring Cylc Review Under Apache

The Cylc Review web service displays suite job logs and other information in web pages - see Viewing Suite Logs in a Web Browser: Cylc Review and Fig. 14. It can run under a WSGI server (e.g. Apache with mod_wsgi) as a service for all users, or as an ad hoc service under your own user account.

To run Cylc Review under Apache, install mod_wsgi and configure it as follows, with paths modified appropriately:

# Apache mod_wsgi config file, e.g.:

# Red Hat Linux: /etc/httpd/conf.d/cylc-wsgi.conf

# Ubuntu Linux: /etc/apache2/mods-available/wsgi.conf

# E.g. for /opt/cylc-7.8.1/

WSGIPythonPath /opt/cylc-7.8.1/lib

WSGIScriptAlias /cylc-review /opt/cylc-7.8.1/bin/cylc-review

(Note the WSGIScriptAlias determines the service URL under the server root).

And allow Apache access to the Cylc library:

# Directory access, in main Apache config file, e.g.:

# Red Hat Linux: /etc/httpd/conf/httpd.conf

# Ubuntu Linux: /etc/apache2/apache2.conf

# E.g. for /opt/cylc-7.8.1/

<Directory /opt/cylc-7.8.1/>

AllowOverride None

Require all granted

</Directory>

The host running the Cylc Review web service, and the service itself (or the user that it runs as) must be able to view the ~/cylc-run directory of all Cylc users.

Use the web server log, e.g. /var/log/httpd/ or /var/log/apache2/, to debug problems.

3.4. Automated Tests

The cylc test battery is primarily intended for developers to check that changes to the source code don’t break existing functionality.

Note

Some test failures can be expected to result from suites timing out, even if nothing is wrong, if you run too many tests in parallel. See cylc test-battery --help.

4. Cylc Terminology

4.1. Jobs and Tasks

A job is a program or script that runs on a computer, and a task is a workflow abstraction - a node in the suite dependency graph - that represents a job.

4.2. Cycle Points

A cycle point is a particular date-time (or integer) point in a sequence of date-time (or integer) points. Each cylc task has a private cycle point and can advance independently to subsequent cycle points. It may sometimes be convenient, however, to refer to the “current cycle point” of a suite (or the previous or next one, etc.) with reference to a particular task, or in the sense of all task instances that “belong to” a particular cycle point. But keep in mind that different tasks may pass through the “current cycle point” (etc.) at different times as the suite evolves.

5. Workflows For Cycling Systems

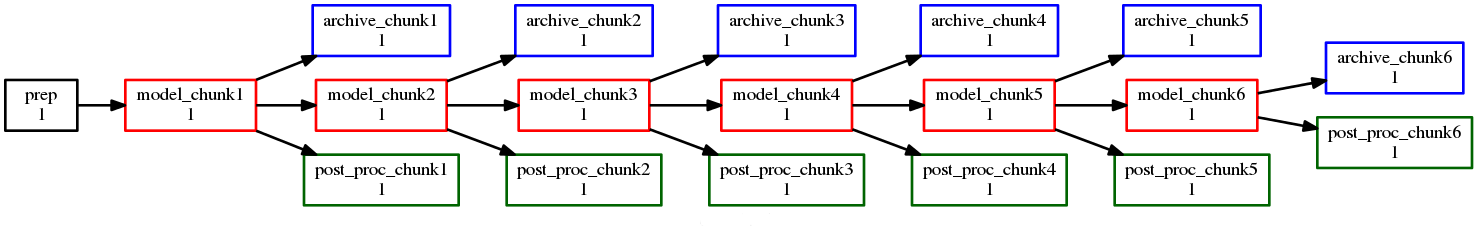

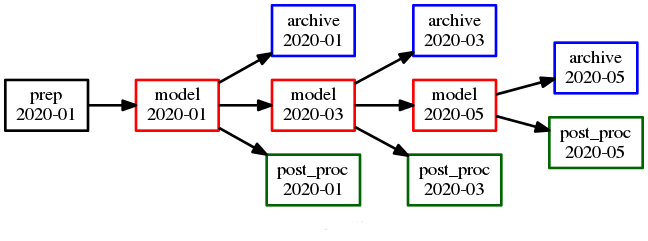

A model run and associated processing may need to be cycled for the following reasons:

- In real time forecasting systems, a new forecast may be initiated at regular intervals when new real time data comes in.

- It may be convenient (or necessary, e.g. due to batch scheduler queue limits) to split single long model runs into many smaller chunks, each with associated pre- and post-processing workflows.

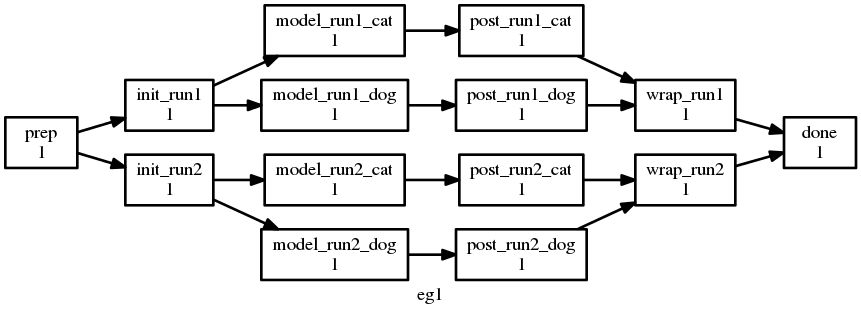

Cylc provides two ways of constructing workflows for cycling systems: cycling workflows and parameterized tasks.

5.1. Cycling Workflows

This is cylc’s classic cycling mode as described in the Introduction. Each instance of a cycling job is represented by a new instance of the same task, with a new cycle point. The suite configuration defines patterns for extending the workflow on the fly, so it can keep running indefinitely if necessary. For example, to cycle model.exe on a monthly sequence we could define a single task model, an initial cycle point, and a monthly sequence. Cylc then generates the date-time sequence and creates a new task instance for each cycle point as it comes up. Workflow dependencies are defined generically with respect to the “current cycle point” of the tasks involved.

This is the only sensible way to run very large suites or operational suites that need to continue cycling indefinitely. The cycling is configured with standards-based ISO 8601 date-time recurrence expressions. Multiple cycling sequences can be used at once in the same suite. See Scheduling - Dependency Graphs.
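The idea of a single task definition plus an open-ended monthly sequence can be sketched in Python with a generator. This is an illustration of the concept only: Cylc does its own ISO 8601 recurrence handling, and the function names, the 2020-11-01 start date, and the compact label format used here are assumptions for the example. The sketch also assumes the initial day of month is 28 or less, so that every month has that day.

```python
from datetime import datetime
from itertools import islice


def monthly_cycle_points(initial):
    """Yield date-times one calendar month apart, without end (sketch).

    ASSUMPTION: initial.day <= 28, so the day exists in every month.
    """
    offset = 0
    while True:
        months = initial.month - 1 + offset
        yield initial.replace(
            year=initial.year + months // 12, month=months % 12 + 1
        )
        offset += 1


def label(point):
    """Format a date-time as a compact ISO 8601 style label."""
    return point.strftime("%Y%m%dT%H%MZ")


# One task name, many instances: take as many cycle points as needed.
first_three = [
    label(p) for p in islice(monthly_cycle_points(datetime(2020, 11, 1)), 3)
]
```

Because the sequence is generated lazily, nothing has to be mapped out in advance: the next cycle point is computed only when it is needed.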

5.2. Parameterized Tasks as a Proxy for Cycling

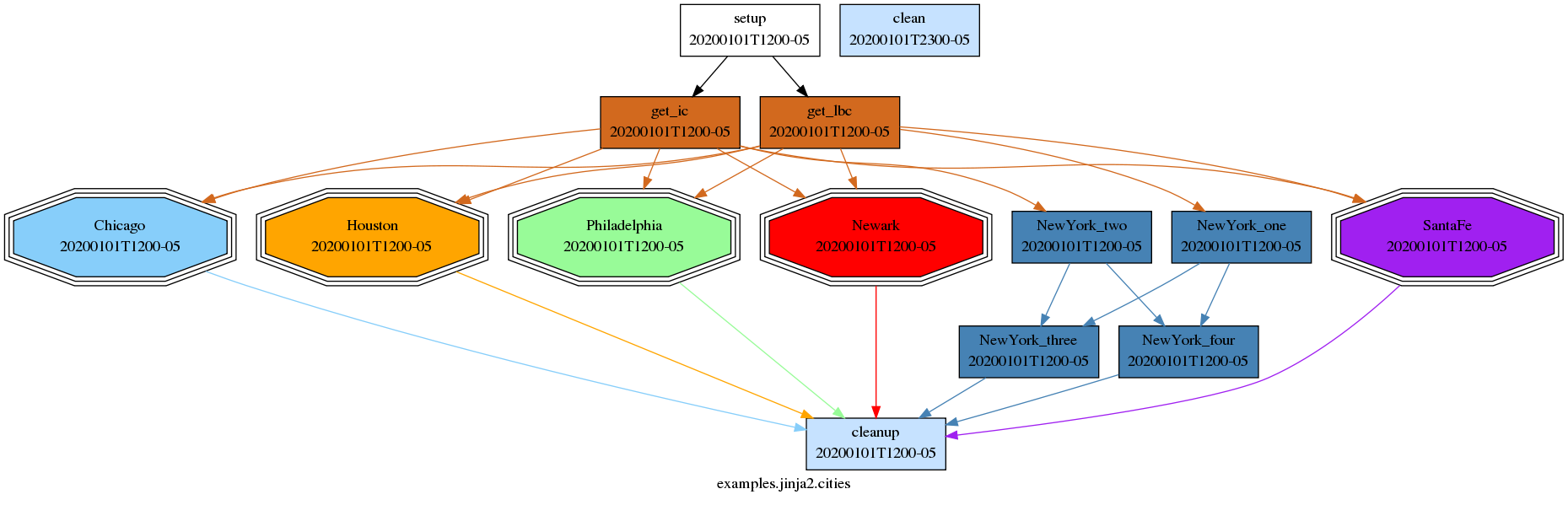

It is also possible to run cycling jobs with a pre-defined static workflow in which each instance of a cycling job is represented by a different task: as far as the abstract workflow is concerned there is no cycling. The sequence of tasks can be constructed efficiently, however, using cylc’s built-in suite parameters (Parameterized Cycling) or explicit Jinja2 loops (Jinja2).

For example, to run model.exe 12 times on a monthly cycle we could loop over an integer parameter R = 0, 1, 2, ..., 11 to define tasks model-R0, model-R1, model-R2, ..., model-R11, and the parameter values could be multiplied by the interval P1M (one month) to get the start point for the corresponding model run.
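The mapping from parameter values to task names and start points can be sketched as below. In a real suite this would be done with suite parameters or Jinja2 in the suite configuration; the Python here only shows the arithmetic. The helper names and the 2020-01-01 start date used in the note after the code are assumptions for the example.

```python
from datetime import datetime


def add_months(point, n):
    """Return `point` shifted forward by n calendar months.

    ASSUMPTION: point.day <= 28, so the day exists in every month.
    """
    months = point.month - 1 + n
    return point.replace(year=point.year + months // 12, month=months % 12 + 1)


def parameterized_model_tasks(initial, n_runs=12):
    """Map each task name model-R<r> to its start point, initial + r * P1M."""
    return dict(
        ("model-R%d" % r, add_months(initial, r)) for r in range(n_runs)
    )
```

With an initial point of 2020-01-01, model-R0 starts on 2020-01-01 and model-R11 on 2020-12-01. Unlike a cycling workflow, the whole set of tasks exists up front.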

This method is only good for smaller workflows of finite duration because every single task has to be mapped out in advance, and cylc has to be aware of all of them throughout the entire run. Additionally Cylc’s cycling workflow capabilities (above) are more powerful, more flexible, and generally easier to use (Cylc will generate the cycle point date-times for you, for instance), so that is the recommended way to drive most cycling systems.

The primary use for parameterized tasks in cylc is to generate ensembles and other groups of related tasks at the same cycle point, not as a proxy for cycling.

5.3. Mixed Cycling Workflows

For completeness we note that parameterized cycling can be used within a cycling workflow. For example, in a daily cycling workflow long (daily) model runs could be split into four shorter runs by parameterized cycling. A simpler six-hourly cycling workflow should be considered first, however.

6. Global (Site, User) Configuration Files

Cylc site and user global configuration files contain settings that affect all suites. Some of these, such as the range of network ports used by cylc, should be set at site level. Legal items, values, and system defaults are documented in (Global (Site, User) Config File Reference).

# cylc site global config file

<cylc-dir>/etc/global.rc

Others, such as the preferred text editor for suite configurations, can be overridden by users:

# cylc user global config file

~/.cylc/$(cylc --version)/global.rc # e.g. ~/.cylc/7.8.2/global.rc

The file <cylc-dir>/etc/global.rc.eg contains instructions on how to generate and install site and user global config files:

#------------------------------------------------------------------------------

# How to create a site or user global.rc config file.

#------------------------------------------------------------------------------

# The "cylc get-global-config" command prints - in valid global.rc format -

# system global defaults, overridden by site global settings (if any),

# overridden by user global settings (if any).

#

# Therefore, to generate a new global config file, do this:

# % cylc get-global-config > global.rc

# edit it as needed and install it in the right location (below).

#

# For legal config items, see the User Guide's global.rc reference appendix.

#

# FILE LOCATIONS:

#----------------------

# SITE: delete or comment out items that you do not need to change (otherwise

# you may unwittingly override future changes to system defaults).

#

# The SITE FILE LOCATION is [cylc-dir]/etc/global.rc, where [cylc-dir] is your

# install location, e.g. /opt/cylc/cylc-7.8.2.

#

# FORWARD COMPATIBILITY: The site global.rc file must be kept in the source

# installation (i.e. it is version specific) because older versions of Cylc

# will not understand newer global config items. WHEN YOU INSTALL A NEW VERSION

# OF CYLC, COPY OVER YOUR OLD SITE GLOBAL CONFIG FILE AND ADD TO IT IF NEEDED.

#

#----------------------

# USER: delete or comment out items that you do not need to change (otherwise

# you may unwittingly override future changes to site or system defaults).

#

# The USER FILE LOCATIONS are:

# 1) ~/.cylc/<CYLC-VERSION>/global.rc # e.g. ~/.cylc/7.8.2/global.rc

# 2) ~/.cylc/global.rc

# If the first location is not found, the second will be checked.

#

# The version-specific location is preferred - see FORWARD COMPATIBILITY above.

# WHEN YOU FIRST USE A NEW VERSION OF CYLC, COPY OVER YOUR OLD USER GLOBAL

# CONFIG FILE AND ADD TO IT IF NEEDED. However, if you only set a few items that don't

# change from one version to the next, you may be OK with the second location.

#------------------------------------------------------------------------------
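The override order and the user file lookup described in the comments above can be sketched as follows. This is an illustration in Python, not Cylc's config loader. The function names and the example setting values are hypothetical, and settings are modelled as plain nested dictionaries.

```python
import os


def merge_config(*layers):
    """Merge nested dicts; later layers override earlier ones (sketch)."""
    merged = {}
    for layer in layers:
        for key, value in layer.items():
            if isinstance(value, dict):
                # Merge sections recursively (this also copies them).
                base = merged.get(key)
                if not isinstance(base, dict):
                    base = {}
                merged[key] = merge_config(base, value)
            else:
                merged[key] = value
    return merged


def user_config_path(home, version, exists=os.path.isfile):
    """Return the user global.rc to load, or None if there is none.

    The version-specific location is checked first, then the
    unversioned one.
    """
    candidates = [
        os.path.join(home, ".cylc", version, "global.rc"),
        os.path.join(home, ".cylc", "global.rc"),
    ]
    for path in candidates:
        if exists(path):
            return path
    return None


# Made-up values: system defaults, then site, then user settings.
system = {"editors": {"terminal": "vim", "gui": "gvim -f"}}
site = {"editors": {"terminal": "nano"}}
user = {"editors": {"gui": "emacs"}}
effective = merge_config(system, site, user)
```

Here effective["editors"] ends up with the site's terminal editor and the user's GUI editor. This is also why unneeded items are best deleted from site and user files: any item left in place keeps overriding the layer below it, even after that layer's default changes.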

7. Tutorial

This section provides a hands-on tutorial introduction to basic cylc functionality.

7.1. User Config File

Some settings affecting cylc’s behaviour can be defined in site and user global config files. For example, to choose the text editor invoked by cylc on suite configurations:

# $HOME/.cylc/$(cylc --version)/global.rc

[editors]

terminal = vim

gui = gvim -f

- For more on site and user global config files see Global (Site, User) Configuration Files and Global (Site, User) Config File Reference.

7.1.1. Configure Environment on Job Hosts

See Configure Site Environment on Job Hosts for information.

7.2. User Interfaces

You should have access to the cylc command line (CLI) and graphical (GUI) user interfaces once cylc has been installed as described in Section Installing Cylc.

7.2.1. Command Line Interface (CLI)

The command line interface is unified under a single top level cylc command that provides access to many sub-commands and their help documentation.

$ cylc help # Top level command help.

$ cylc run --help # Example command-specific help.

Command help transcripts are printed in Command Reference and are available from the GUI Help menu.

Cylc is scriptable - the error status returned by commands can be relied on.

7.2.2. Graphical User Interface (GUI)

The cylc GUI covers the same functionality as the CLI, but it has more sophisticated suite monitoring capability. It can start and stop suites, or connect to suites that are already running; in either case, shutting down the GUI does not affect the suite itself.

$ gcylc & # or:

$ cylc gui & # Single suite control GUI.

$ cylc gscan & # Multi-suite monitor GUI.

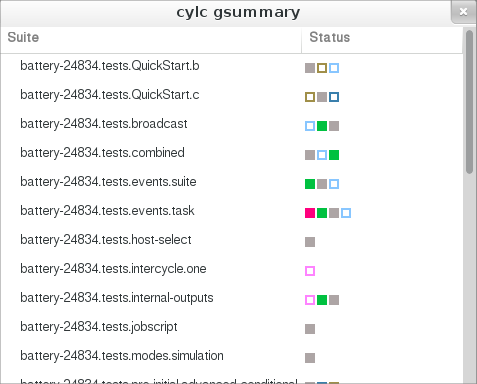

Clicking on a suite in gscan, shown in Fig. 12, opens a gcylc instance for it.

7.3. Suite Configuration

Cylc suites are defined by extended-INI format suite.rc files (the main file format extension is section nesting). These reside in suite configuration directories that may also contain a bin directory and any other suite-related files.

- For more on the suite configuration file format, see Suite Configuration and Suite.rc Reference.

7.4. Suite Registration

Suite registration creates a run directory (under ~/cylc-run/ by default) and populates it with authentication files and a symbolic link to a suite configuration directory. Cylc commands that parse suites can take the file path or the suite name as input. Commands that interact with running suites have to target the suite by name.

# Target a suite by file path:

$ cylc validate /path/to/my/suite/suite.rc

$ cylc graph /path/to/my/suite/suite.rc

# Register a suite:

$ cylc register my.suite /path/to/my/suite/

# Target a suite by name:

$ cylc graph my.suite

$ cylc validate my.suite

$ cylc run my.suite

$ cylc stop my.suite

# etc.

7.5. Suite Passphrases

Registration (above) also generates a suite-specific passphrase file under .service/ in the suite run directory. It is loaded by the suite server program at start-up and used to authenticate connections from client programs.

Possession of a suite’s passphrase file gives full control over it. Without it, the information available to a client is determined by the suite’s public access privilege level.

For more on connection authentication, suite passphrases, and public access, see Client-Server Interaction.

7.6. Import The Example Suites

Run the following command to copy cylc’s example suites and register them for your own use:

$ cylc import-examples /tmp

7.7. Rename The Imported Tutorial Suites

Suites can be renamed by simply renaming (i.e. moving) their run directories.

Make the tutorial suite names shorter, and print their locations with cylc print:

$ mv ~/cylc-run/examples/$(cylc --version)/tutorial ~/cylc-run/tut

$ cylc print -ya tut

tut/oneoff/jinja2 | /tmp/cylc-examples/7.0.0/tutorial/oneoff/jinja2

tut/cycling/two | /tmp/cylc-examples/7.0.0/tutorial/cycling/two

tut/cycling/three | /tmp/cylc-examples/7.0.0/tutorial/cycling/three

# ...

See cylc print --help for other display options.

7.8. Suite Validation¶

Suite configurations can be validated to detect syntax (and other) errors:

# pass:

$ cylc validate tut/oneoff/basic

Valid for cylc-6.0.0

$ echo $?

0

# fail:

$ cylc validate my/bad/suite

Illegal item: [scheduling]special tusks

$ echo $?

1
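Because cylc validate reports success or failure through its exit status, a script can check a suite before trying to run it. The following is a minimal Python sketch, not part of Cylc; the function name suite_is_valid and its injectable run parameter are assumptions made for illustration.

```python
import subprocess


def suite_is_valid(suite, run=subprocess.call):
    """Return True if `cylc validate SUITE` exits with status 0.

    `run` is a hypothetical hook: it defaults to subprocess.call, but can be
    replaced so the logic can be exercised without Cylc installed.
    """
    return run(["cylc", "validate", suite]) == 0
```

With Cylc installed, suite_is_valid("tut/oneoff/basic") would be expected to return True for the valid tutorial suite above.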

7.9. Hello World in Cylc¶

suite: tut/oneoff/basic

Here’s the traditional Hello World program rendered as a cylc suite:

[meta]

title = "The cylc Hello World! suite"

[scheduling]

[[dependencies]]

graph = "hello"

[runtime]

[[hello]]

script = "sleep 10; echo Hello World!"

Cylc suites feature a clean separation of scheduling configuration,

which determines when tasks are ready to run; and runtime

configuration, which determines what to run (and where and

how to run it) when a task is ready. In this example the

[scheduling] section defines a single task called

hello that triggers immediately when the suite starts

up. When the task finishes the suite shuts down. That this is a

dependency graph will be more obvious when more tasks are added.

Under the [runtime] section the

script item defines a simple inlined

implementation for hello: it sleeps for ten seconds,

then prints Hello World!, and exits. This ends up in a job script

generated by cylc to encapsulate the task (below) and,

thanks to some defaults designed to allow quick

prototyping of new suites, it is submitted to run as a background job on

the suite host. In fact cylc even provides a default task implementation

that makes the entire [runtime] section technically optional:

[meta]

title = "The minimal complete runnable cylc suite"

[scheduling]

[[dependencies]]

graph = "foo"

# (actually, 'title' is optional too ... and so is this comment)

(the resulting dummy task just prints out some identifying information and exits).

7.10. Editing Suites¶

The text editor invoked by Cylc on suite configurations is determined by the cylc site and user global config files, as shown above in User Interfaces. Check that you have renamed the tutorial example suites as described above, then open the Hello World suite in your text editor:

$ cylc edit tut/oneoff/basic # in-terminal

$ cylc edit -g tut/oneoff/basic & # or GUI

Alternatively, start gcylc on the suite:

$ gcylc tut/oneoff/basic &

and choose Suite -> Edit from the menu.

The editor will be invoked from within the suite configuration directory

for easy access to other suite files (in this case there are none). There are

syntax highlighting control files for several text editors under

<cylc-dir>/etc/syntax/; see in-file comments for installation

instructions.

7.11. Running Suites¶

7.11.1. CLI¶

Run tut/oneoff/basic using the cylc run command.

As a suite runs, detailed timestamped information is written to a suite log, and progress can be followed with cylc’s suite monitoring tools (below). By default a suite server program daemonizes after printing a short message so that you can exit the terminal or even log out without killing the suite:

$ cylc run tut/oneoff/basic

            ._.
            | |                 The Cylc Suite Engine [7.0.0]
._____._. ._| |_____.           Copyright (C) NIWA & British Crown (Met Office) & Contributors.
| .___| | | | | .___|  _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _
| !___| !_! | | !___.  This program comes with ABSOLUTELY NO WARRANTY;
!_____!___. |_!_____!  see `cylc warranty`. It is free software, you
      .___! |          are welcome to redistribute it under certain
      !_____!          conditions; see `cylc conditions`.

*** listening on https://nwp-1:43027/ ***

To view suite server program contact information:

$ cylc get-suite-contact tut/oneoff/basic

Other ways to see if the suite is still running:

$ cylc scan -n '\btut/oneoff/basic\b' nwp-1

$ cylc ping -v --host=nwp-1 tut/oneoff/basic

$ ps h -opid,args 123456 # on nwp-1

If you’re quick enough (this example only takes 10-15 seconds to run) the

cylc scan command will detect the running suite:

$ cylc scan

tut/oneoff/basic oliverh@nwp-1:43027

Note

You can use the --no-detach and --debug options of cylc run to prevent the suite from daemonizing (i.e. to make it stay attached to your terminal until it exits).

When a task is ready, cylc generates a job script to run it, by default as a background job on the suite host. The job process ID is captured, and job output is directed to log files in standard locations under the suite run directory.

Job log locations relative to the suite run directory look like log/job/1/hello/01/, where 1 is the cycle point of the task hello (for non-cycling tasks this is just 1) and the final 01 is the submit number (so that job logs do not get overwritten if a job is resubmitted for any reason).
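As an illustration of this layout, the short Python sketch below assembles a job log directory from its parts. It is not Cylc code: the function name job_log_dir is hypothetical, the log/ prefix is taken from the run directory tree and the job.out path shown later in this section, and the two-digit zero padding is inferred from the 01 in the example path.

```python
import posixpath


def job_log_dir(run_dir, cycle_point, task_name, submit_num):
    """Build the job log directory for one submission of a task.

    The submit number is zero-padded to two digits, matching the "01"
    shown in the example path (an inference, not a documented rule).
    """
    return posixpath.join(
        run_dir, "log", "job", str(cycle_point), task_name, "%02d" % submit_num
    )
```

For example, a second submission of hello at cycle point 1 would land in a sibling 02 directory, leaving the logs under 01 untouched.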

The suite shuts down automatically once all tasks have succeeded.

7.11.2. GUI¶

The cylc GUI can start and stop suites, or (re)connect to suites that are already running:

$ cylc gui tut/oneoff/basic &

Use the tool bar Play button, or the Control -> Run menu item, to

run the suite again. You may want to alter the suite configuration slightly

to make the task take longer to run. Try right-clicking on the

hello task to view its output logs. The relative merits of the three

suite views - dot, text, and graph - will be more apparent later when we

have more tasks. Closing the GUI does not affect the suite itself.

7.12. Remote Suites¶

Suites can run on localhost or on a remote host.

To start up a suite on a given host, specify it explicitly via the

--host= option to a run or restart command.

Otherwise, Cylc selects the best host to start up on from allowed

run hosts as specified in the global config under

[suite servers], which defaults to localhost. Should there be

more than one allowed host set, the most suitable is determined

according to the settings specified under [[run host select]],

namely exclusion of hosts not meeting suitability thresholds, if

provided, then ranking according to the given rank method.
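The two-step selection described above (exclude unsuitable hosts, then rank the rest) can be pictured with a short Python sketch. This is not Cylc's implementation: the metric name load, the max_load threshold, and the "lowest value wins" ranking are hypothetical stand-ins for whatever thresholds and rank method are actually configured.

```python
def select_host(hosts, max_load=None):
    """Pick a run host from a dict of {host_name: {"load": value}}.

    Step 1: drop hosts whose (hypothetical) load exceeds the threshold,
            if a threshold was given.
    Step 2: rank the remaining hosts and return the one with the lowest load.
    Returns None if no host passes the threshold.
    """
    candidates = {
        name: metrics
        for name, metrics in hosts.items()
        if max_load is None or metrics["load"] <= max_load
    }
    if not candidates:
        return None
    return min(candidates, key=lambda name: candidates[name]["load"])
```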

7.13. Discovering Running Suites¶

Suites that are currently running can be detected with command line or GUI tools:

# list currently running suites and their port numbers:

$ cylc scan

tut/oneoff/basic oliverh@nwp-1:43001

# GUI summary view of running suites:

$ cylc gscan &

The scan GUI is shown in Fig. 12; clicking on a suite in it opens gcylc.

7.14. Task Identifiers¶

At run time, task instances are identified by their name (see Task and Namespace Names), which is determined entirely by the suite configuration, and a cycle point which is usually a date-time or an integer:

foo.20100808T00Z # a task with a date-time cycle point

bar.1 # a task with an integer cycle point (could be non-cycling)

Non-cycling tasks usually just have the cycle point 1, but this

still has to be used to target the task instance with cylc commands.
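A script that handles task identifiers can split them into their two parts. The Python sketch below is illustrative only; the function name parse_task_id is hypothetical, and it assumes that the first dot separates the task name from the cycle point, as in the examples above.

```python
def parse_task_id(task_id):
    """Split a task identifier such as "foo.20100808T00Z" into (name, point)."""
    name, sep, point = task_id.partition(".")
    if not sep or not name or not point:
        raise ValueError("expected NAME.CYCLE_POINT, got %r" % task_id)
    return name, point
```

Note that a non-cycling task still yields a cycle point of "1", which is the value to supply when targeting it with cylc commands.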

7.15. Job Submission: How Tasks Are Executed¶

suite: tut/oneoff/jobsub

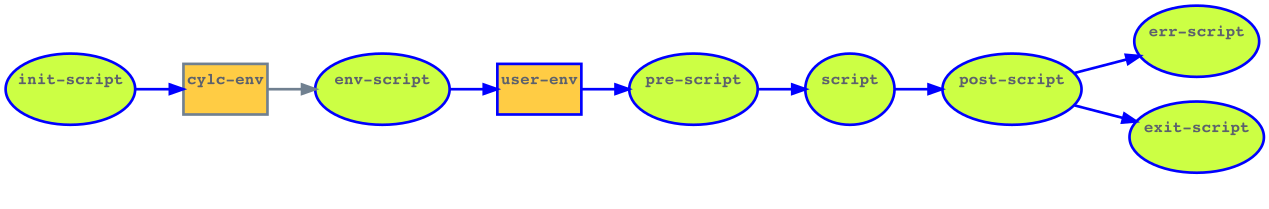

Task job scripts are generated by cylc to wrap the task implementation specified in the suite configuration (environment, script, etc.) in error trapping code, messaging calls to report task progress back to the suite server program, and so forth. Job scripts are written to the suite job log directory where they can be viewed alongside the job output logs. They can be accessed at run time by right-clicking on the task in the cylc GUI, or printed to the terminal:

$ cylc cat-log tut/oneoff/basic hello.1

This command can also print the suite log (and stdout and stderr for suites

in daemon mode) and task stdout and stderr logs (see

cylc cat-log --help).

A new job script can also be generated on the fly for inspection:

$ cylc jobscript tut/oneoff/basic hello.1

Take a look at the job script generated for hello.1 during

the suite run above. The custom scripting should be clearly visible

toward the bottom of the file.

The hello task in the first tutorial suite defaults to

running as a background job on the suite host. To submit it to the Unix

at scheduler instead, configure its job submission settings

as in tut/oneoff/jobsub:

[runtime]

[[hello]]

script = "sleep 10; echo Hello World!"

[[[job]]]

batch system = at

Run the suite again after checking that at is running on your

system.

Cylc supports a number of different batch systems. Tasks submitted to external batch queuing systems such as at, PBS, SLURM, Moab, or LoadLeveler are displayed as submitted in the cylc GUI until they start executing.

- For more on task job scripts, see Task Job Scripts.

- For more on batch systems, see Supported Job Submission Methods.

7.16. Locating Suite And Task Output¶

If the --no-detach option is not used, suite stdout and

stderr will be directed to the suite run directory along with the

time-stamped suite log file, and task job scripts and job logs

(task stdout and stderr). The default suite run directory location is

$HOME/cylc-run:

$ tree $HOME/cylc-run/tut/oneoff/basic/
|-- .service              # location of run time service files
|   |-- contact           # detail on how to contact the running suite
|   |-- db                # private suite run database
|   |-- passphrase        # passphrase for client authentication
|   |-- source            # symbolic link to source directory
|   |-- ssl.cert          # SSL certificate for the suite server
|   `-- ssl.pem           # SSL private key
|-- cylc-suite.db         # back compat symlink to public suite run database
|-- share                 # suite share directory (not used in this example)
|-- work                  # task work space (sub-dirs are deleted if not used)
|   `-- 1                 # task cycle point directory (or 1)
|       `-- hello         # task work directory (deleted if not used)
|-- log                   # suite log directory
|   |-- db                # public suite run database
|   |-- job               # task job log directory
|   |   `-- 1             # task cycle point directory (or 1)
|   |       `-- hello     # task name
|   |           |-- 01    # task submission number
|   |           |   |-- job               # task job script
|   |           |   |-- job-activity.log  # task job activity log
|   |           |   |-- job.err           # task stderr log
|   |           |   |-- job.out           # task stdout log
|   |           |   `-- job.status        # task status file
|   |           `-- NN -> 01              # symlink to latest submission number
|   `-- suite             # suite server log directory
|       |-- err           # suite server stderr log (daemon mode only)
|       |-- out           # suite server stdout log (daemon mode only)
|       `-- log           # suite server event log (timestamped info)

The suite run database files, suite environment file,

and task status files are used internally by cylc. Tasks execute in

private work/ directories that are deleted automatically

if empty when the task finishes. The suite

share/ directory is made available to all tasks (by

$CYLC_SUITE_SHARE_DIR) as a common share space. The task submission

number increments from 1 if a task retries; this is used as a

sub-directory of the log tree to avoid overwriting log files from earlier

job submissions.

The top level run directory location can be changed in site and user config files if necessary, and the suite share and work locations can be configured separately because of the potentially larger disk space requirement.

Task job logs can be viewed by right-clicking on tasks in the gcylc

GUI (so long as the task proxy is live in the suite), manually

accessed from the log directory (of course), or printed to the terminal

with the cylc cat-log command:

# suite logs:

$ cylc cat-log tut/oneoff/basic # suite event log

$ cylc cat-log -o tut/oneoff/basic # suite stdout log

$ cylc cat-log -e tut/oneoff/basic # suite stderr log

# task logs:

$ cylc cat-log tut/oneoff/basic hello.1 # task job script

$ cylc cat-log -o tut/oneoff/basic hello.1 # task stdout log

$ cylc cat-log -e tut/oneoff/basic hello.1 # task stderr log

- For a web-based interface to suite and task logs (and much more), see Rose in Suite Storage, Discovery, Revision Control, and Deployment.

- For more on environment variables supplied to tasks, such as

$CYLC_SUITE_SHARE_DIR, see Task Execution Environment.

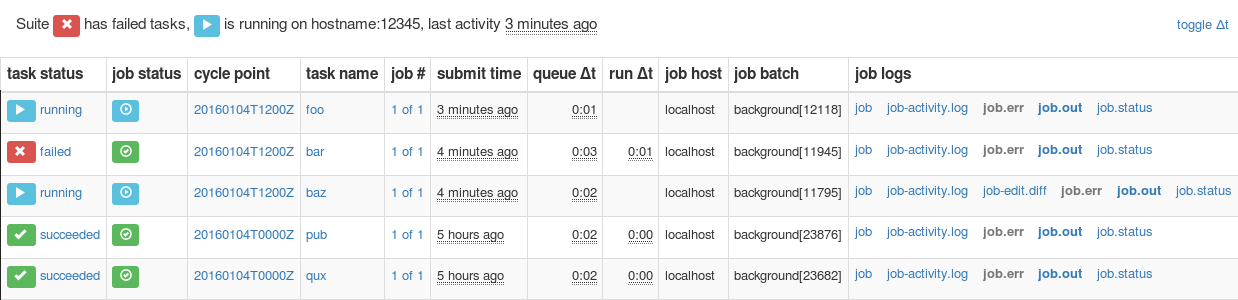

7.17. Viewing Suite Logs in a Web Browser: Cylc Review¶

The Cylc Review web service displays suite job logs and other information in

web pages, as shown in Fig. 14. It can run under a

WSGI server (e.g. Apache with mod_wsgi) as a service for all

users, or as an ad hoc service under your own user account.

If a central Cylc Review service has been set up at your site (e.g. as

described in Configuring Cylc Review Under Apache) the URL will typically be

something like http://<server>/cylc-review/.

Fig. 14 Screenshot of a Cylc Review web page

Otherwise, to start an ad hoc Cylc Review service to view your own suite logs (or those of others, if you have read access to them), run:

setsid cylc review start 0</dev/null 1>/dev/null 2>&1 &

The service should start at http://<server>:8080 (the port number

can optionally be set on the command line). Service logs are written to

~/.cylc/cylc-review*. Run cylc review to view

status information, and cylc review stop to stop the service.

7.18. Remote Tasks¶

suite: tut/oneoff/remote

The hello task in the first two tutorial suites defaults to running on the suite host (see Remote Suites). To make it run on a different host instead, change its runtime configuration as in tut/oneoff/remote:

[runtime]

[[hello]]

script = "sleep 10; echo Hello World!"

[[[remote]]]

host = server1.niwa.co.nz

In general, a task remote is a user account, other than the account running the suite server program, where a task job is submitted to run. It can be on the same machine running the suite or on another machine.

A task remote account must satisfy several requirements:

- Non-interactive ssh must be enabled from the account running the suite server program to the account for submitting (and managing) the remote task job.

- Network settings must allow communication back from the remote task job to the suite, either by network ports or ssh, unless the last-resort one way task polling communication method is used.

- Cylc must be installed and runnable on the task remote account. Other software dependencies like graphviz are not required there.

- Any files needed by a remote task must be installed on the task host. In this example there is nothing to install because the implementation of hello is inlined in the suite configuration and thus ends up entirely contained within the task job script.

If your username is different on the task host, you can add a User

setting for the relevant host in your ~/.ssh/config.

If you are unable to do so, the [[[remote]]] section also supports an

owner=username item.

If you configure a task account according to these requirements, cylc will invoke itself on the remote account (with a login shell by default) to create log directories, transfer any essential service files, send the task job script over, and submit it to run there by the configured batch system.

Remote task job logs are saved to the suite run directory on the task remote, not on the account running the suite. They can be retrieved by right-clicking on the task in the GUI. To have cylc pull them back to the suite account automatically, configure the task like this:

[runtime]

[[hello]]

script = "sleep 10; echo Hello World!"

[[[remote]]]

host = server1.niwa.co.nz

retrieve job logs = True

This suite will attempt to rsync job logs from the remote

host each time a task job completes.

Some batch systems have considerable delays between the time when a job completes and the time when its job logs are written to their normal location. If this is the case, you can configure an initial delay and subsequent retry delays for job log retrieval. E.g.:

[runtime]

[[hello]]

script = "sleep 10; echo Hello World!"

[[[remote]]]

host = server1.niwa.co.nz

retrieve job logs = True

# Retry after 10 seconds, 1 minute and 3 minutes

retrieve job logs retry delays = PT10S, PT1M, PT3M
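The retry delays are ISO 8601 durations. To make the values in the comment above concrete, the following Python sketch converts such a list to seconds. It is a deliberately limited illustration, not Cylc's parser: the function name delays_in_seconds is hypothetical, and only whole hours, minutes, and seconds in the PT...H...M...S form are handled.

```python
import re

# Matches the time part of an ISO 8601 duration, e.g. PT10S, PT1M, PT1H30M.
_DURATION = re.compile(r"^PT(?:(\d+)H)?(?:(\d+)M)?(?:(\d+)S)?$")


def delays_in_seconds(value):
    """Convert a comma-separated list of durations to a list of seconds."""
    seconds = []
    for item in value.split(","):
        match = _DURATION.match(item.strip())
        if match is None or not any(match.groups()):
            raise ValueError("unsupported duration: %r" % item.strip())
        hours, minutes, secs = (int(group or 0) for group in match.groups())
        seconds.append(hours * 3600 + minutes * 60 + secs)
    return seconds
```

Applied to the setting above, this gives delays of 10, 60, and 180 seconds.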

Finally, if the disk space of the suite host is limited, you may want to set

[[[remote]]]retrieve job logs max size=SIZE. The value of SIZE can

be anything that is accepted by the --max-size=SIZE option of the

rsync command. E.g.:

[runtime]

[[hello]]

script = "sleep 10; echo Hello World!"

[[[remote]]]

host = server1.niwa.co.nz

retrieve job logs = True

# Don't get anything bigger than 10MB

retrieve job logs max size = 10M

It is worth noting that cylc uses the existence of a job’s job.out

or job.err in the local file system to indicate a successful job

log retrieval. If retrieve job logs max size=SIZE is set and both

job.out and job.err are bigger than SIZE

then cylc will consider the retrieval as failed. If retry delays are specified,

this will trigger some useless (but harmless) retries. If this occurs

regularly, you should try the following:

- Reduce the verbosity of STDOUT or STDERR from the task.

- Redirect verbose output from STDOUT or STDERR to an alternate log file.

- Adjust the size limit with tolerance to the expected size of STDOUT or STDERR.

- For more on remote tasks see Remote Task Hosting

- For more on task communications, see Tracking Task State.

- For more on suite passphrases and authentication, see Suite Passphrases and Client-Server Interaction.
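The interaction between the size limit and the retrieval success check described above can be sketched in Python as follows. This is a simplified model under stated assumptions, not Cylc's implementation: the function name retrieval_succeeded is hypothetical, sizes are plain byte counts, and rsync's --max-size behaviour is reduced to "skip any file larger than the limit".

```python
def retrieval_succeeded(remote_sizes, max_size=None):
    """Model whether a job log retrieval counts as successful.

    remote_sizes maps file names on the task host to their size in bytes.
    Files larger than max_size are treated as skipped. The retrieval counts
    as successful if job.out or job.err ends up in the local file system.
    """
    retrieved = [
        name
        for name, size in remote_sizes.items()
        if max_size is None or size <= max_size
    ]
    return any(name in ("job.out", "job.err") for name in retrieved)
```

In this model a retrieval only fails when both job.out and job.err exceed the limit, which is the case that leads to the harmless retries mentioned above.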

7.19. Task Triggering¶

suite: tut/oneoff/goodbye

To make a second task called goodbye trigger after

hello finishes successfully, return to the original

example, tut/oneoff/basic, and change the suite graph

as in tut/oneoff/goodbye:

[scheduling]

[[dependencies]]

graph = "hello => goodbye"

or to trigger it at the same time as hello,

[scheduling]

[[dependencies]]

graph = "hello & goodbye"

and configure the new task’s behaviour under [runtime]:

[runtime]

[[goodbye]]

script = "sleep 10; echo Goodbye World!"

Run tut/oneoff/goodbye and check the output from the new task:

$ cat ~/cylc-run/tut/oneoff/goodbye/log/job/1/goodbye/01/job.out

# or

$ cylc cat-log -o tut/oneoff/goodbye goodbye.1

JOB SCRIPT STARTING

cylc (scheduler - 2014-08-14T15:09:30+12): goodbye.1 started at 2014-08-14T15:09:30+12

cylc Suite and Task Identity:

Suite Name : tut/oneoff/goodbye

Suite Host : oliverh-34403dl.niwa.local

Suite Port : 43001

Suite Owner : oliverh

Task ID : goodbye.1

Task Host : nwp-1

Task Owner : oliverh

Task Try No.: 1

Goodbye World!

cylc (scheduler - 2014-08-14T15:09:40+12): goodbye.1 succeeded at 2014-08-14T15:09:40+12

JOB SCRIPT EXITING (TASK SUCCEEDED)

7.19.1. Task Failure And Suicide Triggering¶

suite: tut/oneoff/suicide

Task names in the graph string can be qualified with a state indicator to trigger off task states other than success:

graph = """

a => b # trigger b if a succeeds

c:submit => d # trigger d if c submits

e:finish => f # trigger f if e succeeds or fails

g:start => h # trigger h if g starts executing

i:fail => j # trigger j if i fails

"""

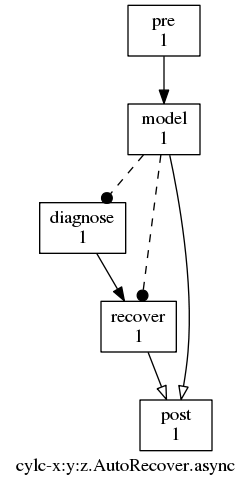
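The meaning of each qualifier can be summarised in a small Python sketch. It is an illustrative model only, not Cylc's scheduler: the event names (submitted, started, succeeded, failed) and the function name trigger_satisfied are assumptions chosen for this example.

```python
# Which recorded task events satisfy each trigger qualifier.
QUALIFIER_EVENTS = {
    "submit": {"submitted"},
    "start": {"started"},
    "succeed": {"succeeded"},
    "fail": {"failed"},
    "finish": {"succeeded", "failed"},  # finished either way
}


def trigger_satisfied(trigger, events):
    """Check a trigger such as "e:finish" against recorded task events.

    events maps task names to the set of events seen so far.
    An unqualified task name means "trigger on success".
    """
    name, _, qualifier = trigger.partition(":")
    wanted = QUALIFIER_EVENTS[qualifier or "succeed"]
    return bool(wanted & events.get(name, set()))
```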

A common use of this is to automate recovery from known modes of failure:

graph = "goodbye:fail => really_goodbye"

i.e. if task goodbye fails, trigger another task that

(presumably) really says goodbye.

Failure triggering generally requires use of suicide triggers as well, to remove the recovery task if it isn’t required (otherwise it would hang about indefinitely in the waiting state):

[scheduling]

[[dependencies]]

graph = """hello => goodbye

goodbye:fail => really_goodbye

goodbye => !really_goodbye # suicide"""

This means if goodbye fails, trigger

really_goodbye; and otherwise, if goodbye

succeeds, remove really_goodbye from the suite.

Try running tut/oneoff/suicide, which also configures

the hello task’s runtime to make it fail, to see how this works.
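The effect of pairing a failure trigger with a suicide trigger can be stated as a tiny decision function. The Python sketch below only models the two graph lines involving really_goodbye; the function name after_goodbye and the outcome strings are hypothetical.

```python
def after_goodbye(outcome):
    """Return (triggered, removed) task lists once goodbye has finished."""
    if outcome == "failed":
        # goodbye:fail => really_goodbye
        return ["really_goodbye"], []
    if outcome == "succeeded":
        # goodbye => !really_goodbye (suicide trigger)
        return [], ["really_goodbye"]
    raise ValueError("goodbye has not finished: %r" % outcome)
```

Either way, really_goodbye does not linger in the waiting state: it is run or it is removed.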

- For more on suite dependency graphs see Scheduling - Dependency Graphs.

- For more on task triggering see Task Triggering.

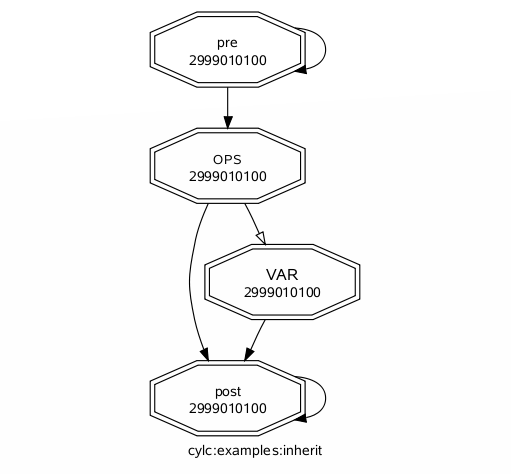

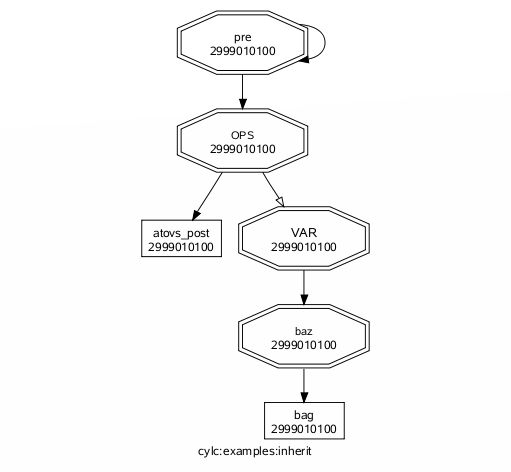

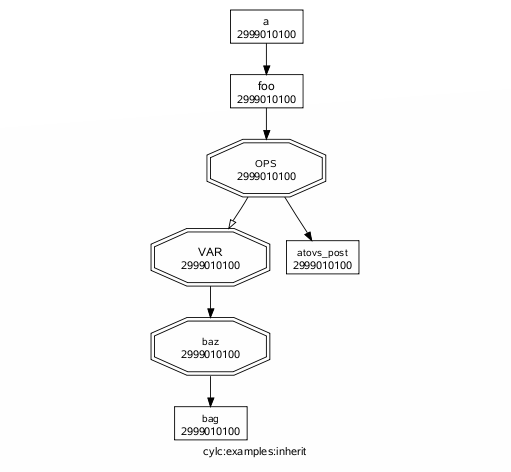

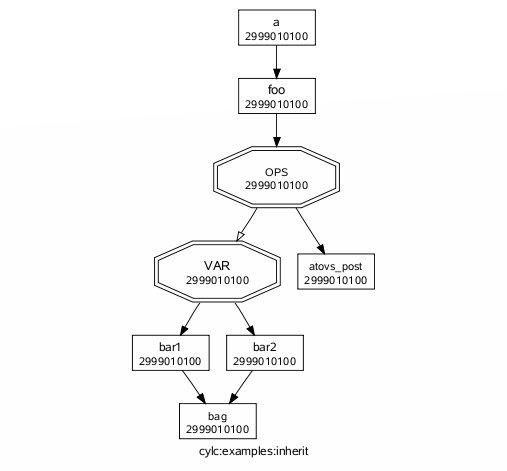

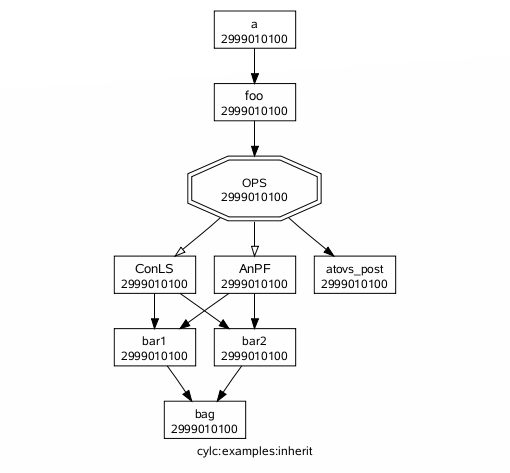

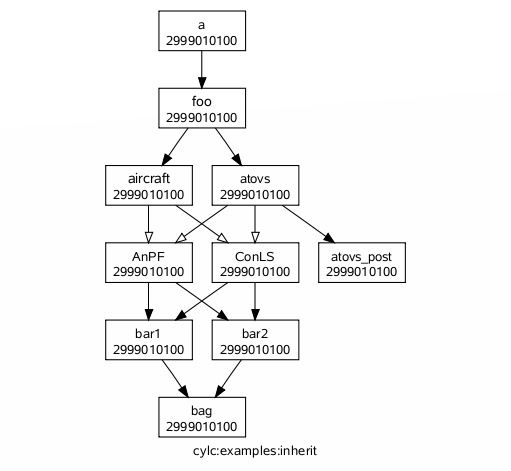

7.20. Runtime Inheritance¶

suite: tut/oneoff/inherit

The [runtime] section is actually a multiple inheritance hierarchy.

Each subsection is a namespace that represents a task, or if it is

inherited by other namespaces, a family. This allows common configuration

to be factored out of related tasks very efficiently.

[meta]

title = "Simple runtime inheritance example"

[scheduling]

[[dependencies]]

graph = "hello => goodbye"

[runtime]

[[root]]

script = "sleep 10; echo $GREETING World!"

[[hello]]

[[[environment]]]

GREETING = Hello

[[goodbye]]

[[[environment]]]

GREETING = Goodbye

The [root] namespace provides defaults for all tasks in the suite.

Here both tasks inherit script from root, which they

customize with different values of the environment variable

$GREETING.
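One way to picture how a task's final settings are assembled is as a nested dictionary merge, with the task's own items overriding those inherited from root. The Python sketch below is a simplified model, not Cylc's implementation: it handles only implicit inheritance from root (no inherit = PARENT chains or multiple parents), and the names merge, RUNTIME, and resolve are hypothetical.

```python
import copy


def merge(parent, child):
    """Return the parent's settings overridden by the child's (nested dicts)."""
    result = copy.deepcopy(parent)
    for key, value in child.items():
        if isinstance(value, dict) and isinstance(result.get(key), dict):
            result[key] = merge(result[key], value)
        else:
            result[key] = copy.deepcopy(value)
    return result


# The [runtime] section of the example suite, written as nested dicts.
RUNTIME = {
    "root": {"script": "sleep 10; echo $GREETING World!"},
    "hello": {"environment": {"GREETING": "Hello"}},
    "goodbye": {"environment": {"GREETING": "Goodbye"}},
}


def resolve(name, runtime=RUNTIME):
    """Combine the root defaults with one task's own settings."""
    return merge(runtime["root"], runtime[name])
```

Here resolve("hello") carries both the script inherited from root and its own GREETING value.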

Note

Inheritance from root is

implicit; from other parents an explicit inherit = PARENT

is required, as shown below.

- For more on runtime inheritance, see Runtime - Task Configuration.

7.21. Triggering Families¶

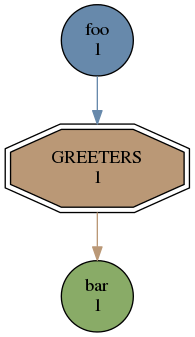

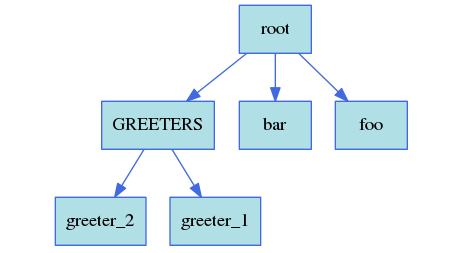

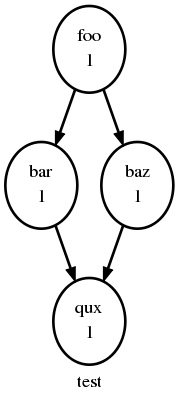

suite: tut/oneoff/ftrigger1

Task families defined by runtime inheritance can also be used as

shorthand in graph trigger expressions. To see this, consider two

“greeter” tasks that trigger off another task foo:

[scheduling]

[[dependencies]]

graph = "foo => greeter_1 & greeter_2"

If we put the common greeting functionality of greeter_1

and greeter_2 into a special GREETERS family,

the graph can be expressed more efficiently like this:

[scheduling]

[[dependencies]]

graph = "foo => GREETERS"

i.e. if foo succeeds, trigger all members of

GREETERS at once. Here’s the full suite with runtime

hierarchy shown:

[meta]

title = "Triggering a family of tasks"

[scheduling]

[[dependencies]]

graph = "foo => GREETERS"

[runtime]

[[root]]

pre-script = "sleep 10"

[[foo]]

# empty (creates a dummy task)

[[GREETERS]]

script = "echo $GREETING World!"

[[greeter_1]]

inherit = GREETERS

[[[environment]]]

GREETING = Hello

[[greeter_2]]

inherit = GREETERS

[[[environment]]]

GREETING = Goodbye

Note

We recommend giving ALL-CAPS names to task families to help distinguish them from task names. However, this is just a convention.

Experiment with the tut/oneoff/ftrigger1 suite to see

how this works.

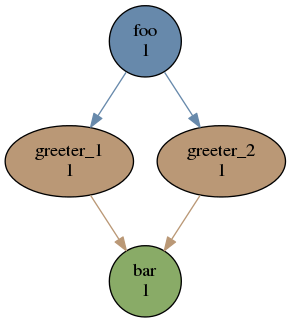

7.22. Triggering Off Of Families¶

suite: tut/oneoff/ftrigger2

Tasks (or families) can also trigger off other families, but

in this case we need to specify what the trigger means in terms of

the upstream family members. Here’s how to trigger another task

bar if all members of GREETERS succeed:

[scheduling]

[[dependencies]]

graph = """foo => GREETERS

GREETERS:succeed-all => bar"""

Verbose validation in this case reports:

$ cylc val -v tut/oneoff/ftrigger2

...

Graph line substitutions occurred:

IN: GREETERS:succeed-all => bar

OUT: greeter_1:succeed & greeter_2:succeed => bar

...

Cylc ignores family member qualifiers like succeed-all on

the right side of a trigger arrow, where they don’t make sense, to

allow the two graph lines above to be combined in simple cases:

[scheduling]

[[dependencies]]

graph = "foo => GREETERS:succeed-all => bar"

Any task trigger qualifier, suffixed with -all or -any to say whether all members or any one member must reach that state, can be used with a family trigger. For example, here’s how to trigger bar if all members of GREETERS finish (succeed or fail) and any of them succeed:

[scheduling]

[[dependencies]]

graph = """foo => GREETERS

GREETERS:finish-all & GREETERS:succeed-any => bar"""

(use of GREETERS:succeed-any by itself here would trigger

bar as soon as any one member of GREETERS

completed successfully). Verbose validation now begins to show how

family triggers can simplify complex graphs, even for this tiny

two-member family:

$ cylc val -v tut/oneoff/ftrigger2

...

Graph line substitutions occurred:

IN: GREETERS:finish-all & GREETERS:succeed-any => bar

OUT: ( greeter_1:succeed | greeter_1:fail ) & \

( greeter_2:succeed | greeter_2:fail ) & \

( greeter_1:succeed | greeter_2:succeed ) => bar

...
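The substitutions reported by verbose validation can be reproduced with a few lines of Python. This sketch only imitates the output shown above for the -all and -any qualifiers; it is not Cylc's graph parser, and the function name expand_family_trigger is hypothetical.

```python
def expand_family_trigger(trigger, members):
    """Expand e.g. "GREETERS:succeed-all" into member-level triggers."""
    _family, _, qualifier = trigger.partition(":")
    state, _, mode = qualifier.rpartition("-")
    if mode not in ("all", "any") or not state:
        raise ValueError("expected FAMILY:STATE-all or FAMILY:STATE-any")
    if state == "finish":
        # A member has finished if it succeeded or failed.
        terms = ["( %s:succeed | %s:fail )" % (m, m) for m in members]
    else:
        terms = ["%s:%s" % (m, state) for m in members]
    if mode == "all":
        return " & ".join(terms)
    joined = " | ".join(terms)
    return "( %s )" % joined if len(terms) > 1 else joined
```

Joining the expansions of GREETERS:finish-all and GREETERS:succeed-any with & gives the three-line OUT expression shown above.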

Experiment with tut/oneoff/ftrigger2 to see how this works.

- For more on family triggering, see Family Triggers.

7.23. Suite Visualization¶

You can style dependency graphs with an optional

[visualization] section, as shown in tut/oneoff/ftrigger2:

[visualization]

default node attributes = "style=filled"

[[node attributes]]

foo = "fillcolor=#6789ab", "color=magenta"

GREETERS = "fillcolor=#ba9876"

bar = "fillcolor=#89ab67"

To display the graph in an interactive viewer:

$ cylc graph tut/oneoff/ftrigger2 & # dependency graph

$ cylc graph -n tut/oneoff/ftrigger2 & # runtime inheritance graph

It should look like Fig. 15 (with the

GREETERS family node expanded on the right).

Fig. 16 The tut/oneoff/ftrigger2 dependency and runtime inheritance graphs

Graph styling can be applied to entire families at once, and custom “node groups” can also be defined for non-family groups.

7.24. External Task Scripts¶

suite: tut/oneoff/external

The tasks in our examples so far have all had their implementation inlined in the suite configuration, but real tasks often need to call external commands, scripts, or executables. To try this, let’s return to the basic Hello World suite and cut the implementation of the task hello out to a file hello.sh in the suite bin directory:

#!/bin/sh

set -e

GREETING=${GREETING:-Goodbye}

echo "$GREETING World! from $0"

Make the task script executable, and change the hello task

runtime section to invoke it:

[meta]

title = "Hello World! from an external task script"

[scheduling]

[[dependencies]]

graph = "hello"

[runtime]

[[hello]]

pre-script = sleep 10

script = hello.sh

[[[environment]]]

GREETING = Hello

If you run the suite now the new greeting from the external task script

should appear in the hello task stdout log. This works

because cylc automatically adds the suite bin directory to

$PATH in the environment passed to tasks via their job

scripts. To execute scripts (etc.) located elsewhere you can

refer to the file by its full file path, or set $PATH

appropriately yourself (this could be done via

$HOME/.profile, which is sourced at the top of the task job

script, or in the suite configuration itself).

Note

set -e is used above to make the script abort on error. This allows the error trapping code in the task job script to automatically detect unforeseen errors.

7.25. Cycling Tasks¶

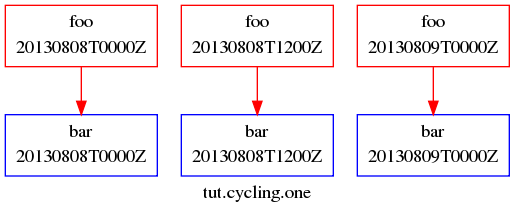

suite: tut/cycling/one

So far we’ve considered non-cycling tasks, which finish without spawning a successor.

Cycling is based around iterating through date-time or integer sequences. A cycling task may run at each cycle point in a given sequence (cycle). For example, a sequence might be a set of date-times every 6 hours starting from a particular date-time. A cycling task may run for each date-time item (cycle point) in that sequence.

There may be multiple instances of this type of task running in parallel, if the opportunity arises and their dependencies allow it. Alternatively, a sequence can be defined with only one valid cycle point - in that case, a task belonging to that sequence may only run once.

Open the tut/cycling/one suite:

[meta]

title = "Two cycling tasks, no inter-cycle dependence"

[cylc]

UTC mode = True

[scheduling]

initial cycle point = 20130808T00

final cycle point = 20130812T00

[[dependencies]]

[[[T00,T12]]] # 00 and 12 hours UTC every day

graph = "foo => bar"

[visualization]

initial cycle point = 20130808T00

final cycle point = 20130809T00

[[node attributes]]

foo = "color=red"

bar = "color=blue"

The difference between cycling and non-cycling suites is all in the

[scheduling] section, so we will leave the

[runtime] section alone for now (this will result in

cycling dummy tasks).

Note

The graph is now defined under a new section heading that makes each

task under it have a succession of cycle points ending in 00 or

12 hours, between specified initial and final cycle

points (or indefinitely if no final cycle point is given), as shown in

Fig. 17.

Fig. 17 The tut/cycling/one suite
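To see which cycle points the [[[T00,T12]]] section produces for this suite, the sequence can be enumerated with standard Python date-time arithmetic. This is an illustrative sketch, not how Cylc computes recurrences; the function name cycle_points is hypothetical, and time zones are ignored because the suite runs in UTC mode.

```python
from datetime import datetime, timedelta

FMT = "%Y%m%dT%H"


def cycle_points(initial, final, hours=(0, 12)):
    """List the cycle points at the given hours between two points (inclusive)."""
    point = datetime.strptime(initial, FMT)
    end = datetime.strptime(final, FMT)
    points = []
    while point <= end:
        if point.hour in hours:
            points.append(point.strftime(FMT))
        point += timedelta(hours=1)
    return points
```

For the initial and final cycle points configured above this yields nine cycle points, from 20130808T00 to 20130812T00, each with its own foo and bar.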

If you run this suite, instances of foo will spawn in parallel out to the runahead limit, and each bar will trigger off the corresponding instance of foo at the same cycle point. The runahead limit, which defaults to a few cycles but is configurable, prevents uncontrolled spawning of cycling tasks in suites that are not constrained by clock triggers in real time operation.

Experiment with tut/cycling/one to see how cycling tasks work.

7.25.1. ISO 8601 Date-Time Syntax¶

The suite above is a very simple example of a cycling date-time workflow. More

generally, cylc comprehensively supports the ISO 8601 standard for date-time

instants, intervals, and sequences. Cycling graph sections can be specified

using full ISO 8601 recurrence expressions, but these may be simplified

by assuming context information from the suite - namely initial and final cycle

points. One form of the recurrence syntax looks like

Rn/start-date-time/period (Rn means run n times). In the example

above, if the initial cycle point

is always at 00 or 12 hours then [[[T00,T12]]] could be

written as [[[PT12H]]], which is short for

[[[R/initial-cycle-point/PT12H/]]] - i.e. run every 12 hours

indefinitely starting at the initial cycle point. It is possible to add

constraints to the suite to only allow initial cycle points at 00 or

12 hours e.g.

[scheduling]

initial cycle point = 20130808T00

initial cycle point constraints = T00, T12
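The reason for the constraint is that a PT12H sequence simply steps forward from wherever the initial cycle point happens to be. The Python sketch below illustrates this with standard date-time arithmetic; it is not Cylc code, and the names check_constraints and every_12_hours are hypothetical.

```python
from datetime import datetime, timedelta

FMT = "%Y%m%dT%H"


def check_constraints(initial, allowed_hours=(0, 12)):
    """Reject an initial cycle point that is not at an allowed hour."""
    hour = datetime.strptime(initial, FMT).hour
    if hour not in allowed_hours:
        raise ValueError("initial cycle point %s is not at an allowed hour" % initial)
    return initial


def every_12_hours(initial, count):
    """First `count` points of a 12-hourly sequence starting at `initial`."""
    start = datetime.strptime(initial, FMT)
    return [(start + timedelta(hours=12 * i)).strftime(FMT) for i in range(count)]
```

Starting at 20130808T00, the 12-hourly sequence stays on 00 and 12 hours, so it matches T00,T12. Starting at 20130808T06 it would run at 06 and 18 hours instead, which is the case the constraint rules out.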

- For a comprehensive description of ISO 8601 based date-time cycling, see Advanced Examples

- For more on runahead limiting in cycling suites, see Runahead Limiting.

7.25.2. Inter-Cycle Triggers¶

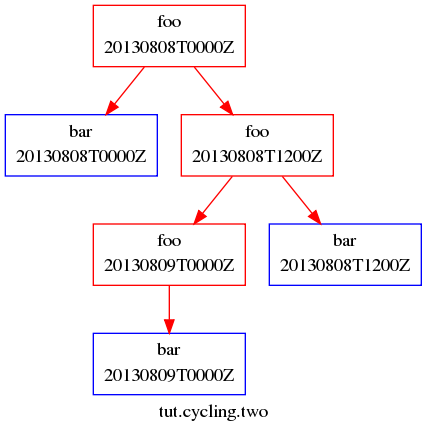

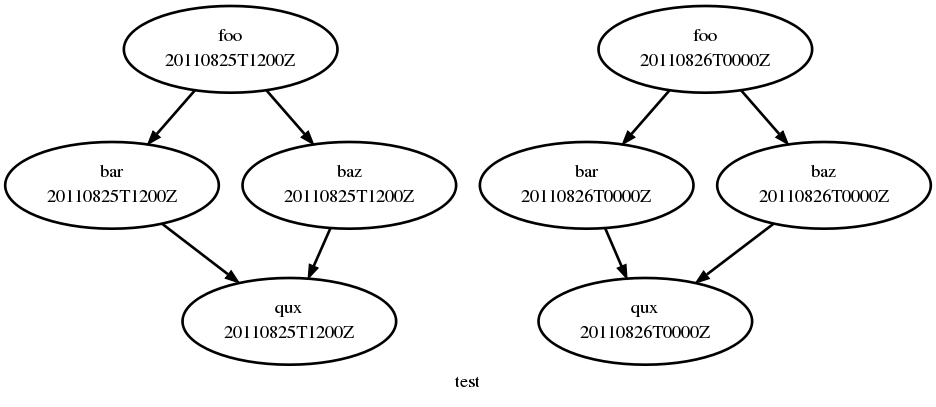

suite: tut/cycling/two

The tut/cycling/two suite adds inter-cycle dependence

to the previous example:

[scheduling]

[[dependencies]]

# Repeat with cycle points of 00 and 12 hours every day:

[[[T00,T12]]]

graph = "foo[-PT12H] => foo => bar"

For any given cycle point in the sequence defined by the

cycling graph section heading, bar triggers off

foo as before, but now foo triggers off its own

previous instance foo[-PT12H]. Date-time offsets in

inter-cycle triggers are expressed as ISO 8601 intervals (12 hours

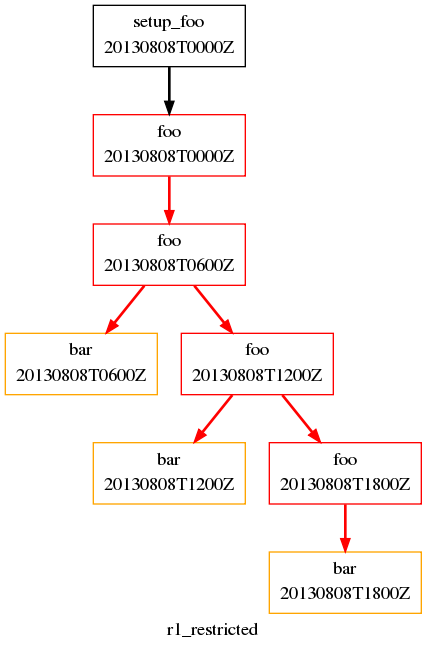

in this case). Fig. 18 shows how this connects the

cycling graph sections together.

Fig. 18 The tut/cycling/two suite

Experiment with this suite to see how inter-cycle triggers work.

Note

The first instance of foo, at suite start-up, will

trigger immediately in spite of its inter-cycle trigger, because cylc

ignores dependence on points earlier than the initial cycle point.

However, the presence of an inter-cycle trigger usually implies something

special has to happen at start-up. If a model depends on its own previous

instance for restart files, for example, then some special process has to

generate the initial set of restart files when there is no previous cycle

point to do it. The following section shows one way to handle this

in cylc suites.

7.25.3. Initial Non-Repeating (R1) Tasks¶

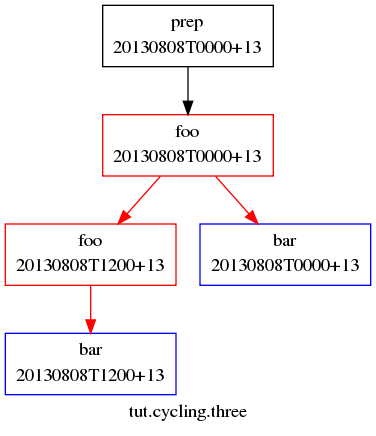

suite: tut/cycling/three

Sometimes we want to be able to run a task at the initial cycle point, but refrain from running it in subsequent cycles. We can do this by writing an extra set of dependencies that are only valid at a single date-time cycle point. If we choose this to be the initial cycle point, these will only apply at the very start of the suite.

The cylc syntax for writing this single date-time cycle point occurrence is

R1, which stands for R1/no-specified-date-time/no-specified-period.

This is an adaptation of part of the ISO 8601 date-time standard’s recurrence

syntax (Rn/date-time/period) with some special context information

supplied by cylc for the no-specified-* data.

The 1 in the R1 means run once. As we’ve specified

no date-time, Cylc will use the initial cycle point date-time by default,

which is what we want. We’ve also omitted the period: cylc sets it to a zero-length interval in this case (as the recurrence never repeats, the value is not significant).

For example, in tut/cycling/three:

[cylc]

cycle point time zone = +13

[scheduling]

initial cycle point = 20130808T00

final cycle point = 20130812T00

[[dependencies]]

[[[R1]]]

graph = "prep => foo"

[[[T00,T12]]]

graph = "foo[-PT12H] => foo => bar"

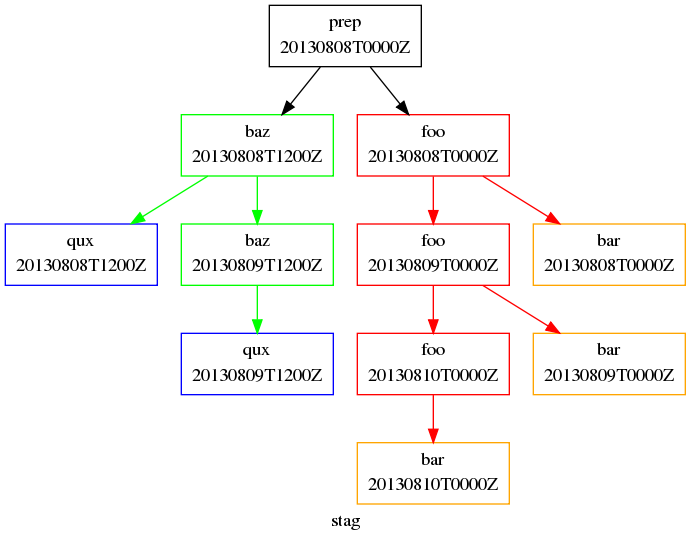

This is shown in Fig. 19.

Note

The time zone has been set to +1300 in this case,

instead of UTC (Z) as before. If no time zone or UTC mode was set,

the local time zone of your machine will be used in the cycle points.

At the initial cycle point, foo will depend on foo[-PT12H] and also

on prep:

prep.20130808T0000+13 & foo.20130807T1200+13 => foo.20130808T0000+13

Thereafter, it will just look like e.g.:

foo.20130808T0000+13 => foo.20130808T1200+13

However, in our initial cycle point example, the dependence on

foo.20130807T1200+13 will be ignored, because that task’s cycle

point is earlier than the suite’s initial cycle point and so it cannot run.

This means that the initial cycle point dependencies for foo

actually look like:

prep.20130808T0000+13 => foo.20130808T0000+13

Fig. 19 The tut/cycling/three suite

R1 tasks can also be used to make something special happen at suite shutdown, or at any single cycle point throughout the suite run. For a full primer on cycling syntax, see Advanced Examples.
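The initial-cycle-point behaviour of tut/cycling/three described above can be sketched in a few lines of Python. This is a simplified model under stated assumptions, not cylc's scheduler: the helper names are hypothetical, and the +13 suffix is simply appended as text.

```python
from datetime import datetime, timedelta

INITIAL = datetime(2013, 8, 8, 0)  # initial cycle point = 20130808T00
OFFSET = timedelta(hours=12)       # the -PT12H inter-cycle offset


def label(task, point):
    """Format a task identifier in the style used in the text above."""
    return "%s.%s+13" % (task, point.strftime("%Y%m%dT%H%M"))


def foo_prerequisites(point):
    """Prerequisites of foo at a cycle point, with pre-initial ones dropped."""
    prereqs = []
    if point == INITIAL:
        # Contributed by the [[[R1]]] graph: prep => foo
        prereqs.append(label("prep", point))
    previous = point - OFFSET
    if previous >= INITIAL:
        # Contributed by the [[[T00,T12]]] graph: foo[-PT12H] => foo
        prereqs.append(label("foo", previous))
    return prereqs


print(foo_prerequisites(INITIAL))           # ['prep.20130808T0000+13']
print(foo_prerequisites(INITIAL + OFFSET))  # ['foo.20130808T0000+13']
```

At the initial cycle point only prep remains as a prerequisite; at every later point only the previous instance of foo does.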

7.25.4. Integer Cycling

suite: tut/cycling/integer

Cylc can also do integer cycling for repeating workflows that are not date-time based.

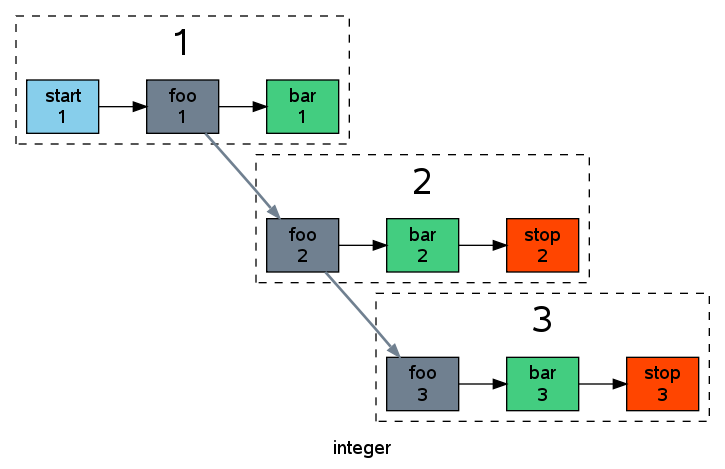

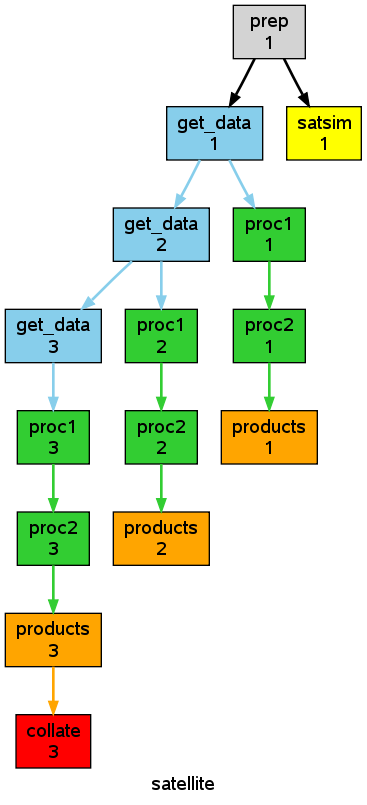

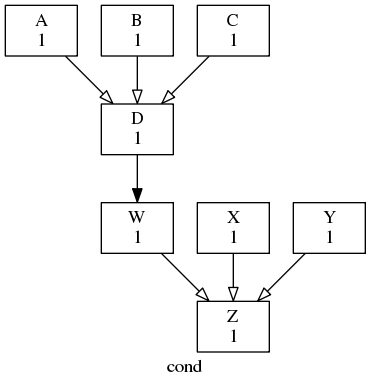

Open the tut/cycling/integer suite, which is plotted in

Fig. 20.

[scheduling]

cycling mode = integer

initial cycle point = 1

final cycle point = 3

[[dependencies]]

[[[R1]]] # = R1/1/?

graph = start => foo

[[[P1]]] # = R/1/P1

graph = foo[-P1] => foo => bar

[[[R2/P1]]] # = R2/P1/3

graph = bar => stop

[visualization]

[[node attributes]]

start = "style=filled", "fillcolor=skyblue"

foo = "style=filled", "fillcolor=slategray"

bar = "style=filled", "fillcolor=seagreen3"

stop = "style=filled", "fillcolor=orangered"

Fig. 20 The tut/cycling/integer suite

The integer cycling notation is intended to look similar to the ISO 8601

date-time notation, but it is simpler for obvious reasons. The example suite

illustrates two recurrence forms,

Rn/start-point/period and

Rn/period/stop-point, simplified somewhat using suite context

information (namely the initial and final cycle points). The first form is

used to run one special task called start at start-up, and for the

main cycling body of the suite; and the second form to run another special task

called stop in the final two cycles. The P character

denotes period (interval) just like in the date-time notation.

R/1/P2 would generate the sequence of points 1,3,5,....

For more on integer cycling, including a more realistic usage example, see Integer Cycling.
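As a rough illustration of the two recurrence forms, the following Python sketch expands them into integer cycle points for the example suite (initial cycle point 1, final cycle point 3). The function names are hypothetical and the logic is a simplified model, not cylc's parser.

```python
from itertools import islice


def forward(start, period, repeats=None, final=None):
    """Rn/start-point/period: count forward from the start point."""
    point, n = start, 0
    while (repeats is None or n < repeats) and (final is None or point <= final):
        yield point
        point += period
        n += 1


def backward(stop, period, repeats, initial):
    """Rn/period/stop-point: count back from the stop point, ascending result."""
    points = [stop - i * period for i in range(repeats)]
    return sorted(p for p in points if p >= initial)


INITIAL, FINAL = 1, 3

print(list(forward(INITIAL, 0, repeats=1)))             # [[[R1]]]    -> [1]
print(list(forward(INITIAL, 1, final=FINAL)))           # [[[P1]]]    -> [1, 2, 3]
print(backward(FINAL, 1, repeats=2, initial=INITIAL))   # [[[R2/P1]]] -> [2, 3]
print(list(islice(forward(1, 2), 3)))                   # R/1/P2      -> [1, 3, 5]
```

So start runs at point 1 only, foo and bar run at points 1 to 3, and stop runs at points 2 and 3.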

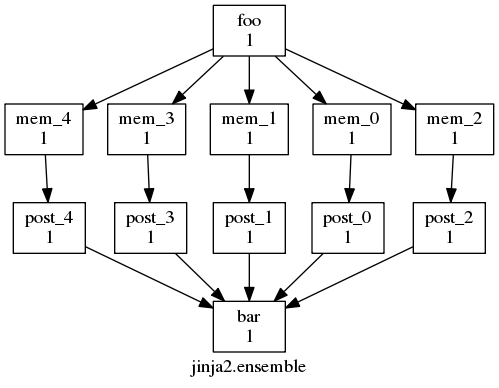

7.26. Jinja2

suite: tut/oneoff/jinja2

Cylc has built-in support for the Jinja2 template processor, which allows us to embed code in suite configurations to generate the final result seen by cylc.

The tut/oneoff/jinja2 suite illustrates two common

uses of Jinja2: changing suite content or structure based on the value

of a logical switch; and iteratively generating dependencies and runtime

configuration for groups of related tasks:

#!jinja2

{% set MULTI = True %}

{% set N_GOODBYES = 3 %}

[meta]

title = "A Jinja2 Hello World! suite"

[scheduling]

[[dependencies]]

{% if MULTI %}

graph = "hello => BYE"

{% else %}

graph = "hello"

{% endif %}

[runtime]

[[hello]]

script = "sleep 10; echo Hello World!"

{% if MULTI %}

[[BYE]]

script = "sleep 10; echo Goodbye World!"

{% for I in range(0,N_GOODBYES) %}

[[ goodbye_{{I}} ]]

inherit = BYE

{% endfor %}

{% endif %}

To view the result of Jinja2 processing with the Jinja2 flag

MULTI set to False:

$ cylc view --jinja2 --stdout tut/oneoff/jinja2

[meta]

title = "A Jinja2 Hello World! suite"

[scheduling]

[[dependencies]]

graph = "hello"

[runtime]

[[hello]]

script = "sleep 10; echo Hello World!"

And with MULTI set to True:

$ cylc view --jinja2 --stdout tut/oneoff/jinja2

[meta]

title = "A Jinja2 Hello World! suite"

[scheduling]

[[dependencies]]

graph = "hello => BYE"

[runtime]

[[hello]]

script = "sleep 10; echo Hello World!"

[[BYE]]

script = "sleep 10; echo Goodbye World!"

[[ goodbye_0 ]]

inherit = BYE

[[ goodbye_1 ]]

inherit = BYE

[[ goodbye_2 ]]

inherit = BYE
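For readers less familiar with templating, the control flow of the [runtime] part of this template can be mirrored in plain Python. This sketch does not use Jinja2 itself; render_runtime is a hypothetical helper that only reproduces the if/for logic shown above.

```python
def render_runtime(multi, n_goodbyes):
    """Return the [runtime] lines that the template's if/for blocks would emit."""
    lines = ["[runtime]", "[[hello]]", 'script = "sleep 10; echo Hello World!"']
    if multi:  # corresponds to {% if MULTI %}
        lines += ["[[BYE]]", 'script = "sleep 10; echo Goodbye World!"']
        for i in range(0, n_goodbyes):  # {% for I in range(0,N_GOODBYES) %}
            lines += ["[[ goodbye_%d ]]" % i, "inherit = BYE"]
    return lines


for line in render_runtime(multi=True, n_goodbyes=3):
    print(line)
```

Changing n_goodbyes changes the number of generated goodbye_N tasks, just as changing N_GOODBYES does in the suite.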

7.27. Task Retry On Failure

suite: tut/oneoff/retry

Tasks can be configured to retry a number of times if they fail.

An environment variable $CYLC_TASK_TRY_NUMBER increments

from 1 on each successive try, and is passed to the task to allow

different behaviour on the retry:

[meta]

title = "A task with automatic retry on failure"

[scheduling]

[[dependencies]]

graph = "hello"

[runtime]

[[hello]]

script = """

sleep 10

if [[ $CYLC_TASK_TRY_NUMBER -lt 3 ]]; then

echo "Hello ... aborting!"

exit 1

else

echo "Hello World!"

fi"""

[[[job]]]

execution retry delays = 2*PT6S # retry twice after 6-second delays

If a task with configured retries fails, it goes into the retrying state until the next retry delay is up, then it resubmits. It only enters the failed state on a final definitive failure.

If a task with configured retries is killed (by cylc kill or

via the GUI) it goes to the held state so that the operator can decide

whether to release it and continue the retry sequence or to abort the retry

sequence by manually resetting it to the failed state.

Experiment with tut/oneoff/retry to see how this works.
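The retry sequence can also be reasoned about with a small Python model. This is a sketch under stated assumptions: parse_delays handles only simple entries of the form N*PTnS, PTnM or PTnH (a small subset of ISO 8601 durations), and run_with_retries is a hypothetical stand-in for the scheduler that ignores the actual waiting and the held state.

```python
import re


def parse_delays(spec):
    """Expand a delay list such as '2*PT6S' into a list of delays in seconds."""
    units = {"S": 1, "M": 60, "H": 3600}
    delays = []
    for item in spec.split(","):
        match = re.fullmatch(r"\s*(?:(\d+)\*)?PT(\d+)([SMH])\s*", item)
        if match is None:
            raise ValueError("unsupported delay: %r" % item)
        repeat, amount, unit = match.groups()
        delays += [int(amount) * units[unit]] * int(repeat or 1)
    return delays


def run_with_retries(job, delays):
    """Call job(try_number) until it succeeds or the retries are used up."""
    history = []
    last_try = len(delays) + 1
    for try_number in range(1, last_try + 1):
        if job(try_number):
            history.append((try_number, "succeeded"))
            return "succeeded", history
        state = "failed" if try_number == last_try else "retrying"
        history.append((try_number, state))
    return "failed", history


def hello(try_number):
    """Mimic the example task: fail on tries 1 and 2, succeed on try 3."""
    return try_number >= 3


delays = parse_delays("2*PT6S")
print(delays)
print(run_with_retries(hello, delays))
```

With two configured delays the task gets three tries in total, so the example task passes through the retrying state twice before it succeeds.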

7.28. Other Users’ Suites

If you have read access to another user’s account (even on another host)

it is possible to use cylc monitor to look at their suite’s

progress without full shell access to their account. To do this, you

will need to copy their suite passphrase to

$HOME/.cylc/SUITE_OWNER@SUITE_HOST/SUITE_NAME/passphrase

(use of the host and owner names is optional here - see Full Control - With Auth Files) and also retrieve the port number of the running suite from:

~SUITE_OWNER/cylc-run/SUITE_NAME/.service/contact

Once you have this information, you can run

$ cylc monitor --user=SUITE_OWNER --port=SUITE_PORT SUITE_NAME

to view the progress of their suite.

Other suite-connecting commands work in the same way; see Remote Control.
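The steps above could be scripted along the following lines. This is only a sketch: it assumes the contact file holds simple KEY=VALUE lines and that the port is stored under a key named CYLC_SUITE_PORT, so check a real contact file before relying on either. The owner, host and suite names are placeholders.

```python
import os


def parse_contact(text):
    """Parse KEY=VALUE lines (assumed contact file layout) into a dict."""
    info = {}
    for line in text.splitlines():
        if "=" in line:
            key, value = line.split("=", 1)
            info[key.strip()] = value.strip()
    return info


def passphrase_path(owner, host, name, home="~"):
    """Where the copied passphrase is expected, per the path given above."""
    return os.path.join(
        os.path.expanduser(home), ".cylc", "%s@%s" % (owner, host), name, "passphrase"
    )


def monitor_command(owner, port, name):
    """Build the cylc monitor command line shown above."""
    return ["cylc", "monitor", "--user=%s" % owner, "--port=%s" % port, name]


# Hypothetical contact file content, for illustration only.
sample = "CYLC_SUITE_HOST=hostA\nCYLC_SUITE_PORT=43001\n"
contact = parse_contact(sample)
print(passphrase_path("alice", "hostA", "my.suite", home="/home/me"))
print(" ".join(monitor_command("alice", contact["CYLC_SUITE_PORT"], "my.suite")))
```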

7.29. Other Things To Try

Almost every feature of cylc can be tested quickly and easily with a

simple dummy suite. You can write your own, or start from one of the

example suites in /path/to/cylc/examples (see use of

cylc import-examples above) - they all run “out of the box”

and can be copied and modified at will.

- Change the suite runahead limit in a cycling suite.

- Stop a suite mid-run with cylc stop, and restart it again with cylc restart.

- Hold (pause) a suite mid-run with cylc hold, then modify the suite configuration and cylc reload it before using cylc release to continue (you can also reload without holding).

- Use the gcylc View menu to show the task state color key and watch tasks in the task-states example evolve as the suite runs.

- Manually re-run a task that has already completed or failed, with cylc trigger.

- Use an internal queue to prevent more than an allotted number of tasks from running at once even though they are ready - see Limiting Activity With Internal Queues.

- Configure task event hooks to send an email, or shut the suite down, on task failure.

8. Suite Name Registration

8.1. Suite Registration

Cylc commands target suites via their names, which are relative path names

under the suite run directory (~/cylc-run/ by default). Suites can

be grouped together under sub-directories. E.g.:

$ cylc print -t nwp

nwp

|-oper

| |-region1 Local Model Region1 /home/oliverh/cylc-run/nwp/oper/region1

| `-region2 Local Model Region2 /home/oliverh/cylc-run/nwp/oper/region2

`-test

`-region1 Local Model TEST Region1 /home/oliverh/cylc-run/nwp/test/region1

Suite names can be pre-registered with the cylc register command,

which creates the suite run directory structure and some service files

underneath it. Otherwise, cylc run will do this at suite start up.

8.2. Suite Names

Suite names are not validated. Names for suites can be anything that is a valid filename within your operating system’s file system, which includes restrictions on name length (as described under Task and Namespace Names), with the exceptions of:

- /, which is not supported for general filenames on e.g. Linux systems but is allowed for suite names to generate hierarchical suites (see register);

- while possible in filenames on many systems, it is strongly advised that suite names do not contain any whitespace characters (e.g. as in my suite).
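As a small illustration of how a hierarchical suite name maps onto the run directory, and of the whitespace advice above, consider this Python sketch. The helper names are hypothetical; cylc itself does not validate suite names this way.

```python
import os
import re


def run_directory(suite_name, run_root="~/cylc-run"):
    """Map a (possibly hierarchical) suite name to a path under the run directory."""
    return os.path.join(os.path.expanduser(run_root), *suite_name.split("/"))


def has_whitespace(suite_name):
    """True if the name contains whitespace, which is strongly discouraged."""
    return re.search(r"\s", suite_name) is not None


print(run_directory("nwp/oper/region1", run_root="/home/oliverh/cylc-run"))
print(has_whitespace("my suite"))          # True
print(has_whitespace("nwp/test/region1"))  # False
```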

9. Suite Configuration

Cylc suites are defined in structured, validated, suite.rc files that concisely specify the properties of, and the relationships between, the various tasks managed by the suite. This section of the User Guide deals with the format and content of the suite.rc file, including task definition. Task implementation - what’s required of the real commands, scripts, or programs that do the processing that the tasks represent - is covered in Task Implementation; and task job submission - how tasks are submitted to run - is in Task Job Submission and Management.

9.1. Suite Configuration Directories

A cylc suite configuration directory contains:

- A suite.rc file: this is the suite configuration.

  - And any include-files used in it (see below; may be kept in sub-directories).

- A bin/ sub-directory (optional)

  - For scripts and executables that implement, or are used by, suite tasks.

  - Automatically added to $PATH in task execution environments.

  - Alternatively, tasks can call external commands, scripts, or programs; or they can be scripted entirely within the suite.rc file.

- A lib/python/ sub-directory (optional)

  - For custom job submission modules (see Custom Job Submission Methods) and local Python modules imported by custom Jinja2 filters, tests and globals (see Custom Jinja2 Filters, Tests and Globals).

- Any other sub-directories and files - documentation, control files, etc. (optional)

  - Holding everything in one place makes proper suite revision control possible.

  - Portable access to files here, for running tasks, is provided through $CYLC_SUITE_DEF_PATH (see Task Execution Environment).

  - Ignored by cylc, but the entire suite configuration directory tree is copied when you copy a suite using cylc commands.

A typical example:

/path/to/my/suite # suite configuration directory

suite.rc # THE SUITE CONFIGURATION FILE

bin/ # scripts and executables used by tasks