16.3. Basic Principles¶

This section covers general principles that should be kept in mind when writing any suite. More advanced topics are covered later: Efficiency And Maintainability and Portable Suites.

16.3.1. UTC Mode¶

Cylc has full timezone support if needed, but real time NWP suites should use UTC mode to avoid problems at the transition between local standard time and daylight saving time, and to enable the same suite to run the same way in different timezones.

[cylc]

UTC mode = True

16.3.2. Fine Or Coarse-Grained Suites¶

Suites can have many small simple tasks, fewer large complex tasks, or anything in between. A task that runs many distinct processes can be split into many distinct tasks. The fine-grained approach is more transparent and it allows more task level concurrency and quicker failure recovery - you can rerun just what failed without repeating anything unnecessarily.

16.3.2.1. rose bunch¶

One caveat to our fine-graining advice is that submitting a large number of

small tasks at once may be a problem on some platforms. If you have many

similar concurrent jobs you can use rose bunch to pack them into a

single task with incremental rerun capability: retriggering the task will rerun

just the component jobs that did not successfully complete earlier.

16.3.3. Monolithic Or Interdependent Suites¶

When writing suites from scratch you may need to decide between putting multiple loosely connected sub-workflows into a single large suite, or constructing a more modular system of smaller suites that depend on each other through inter-suite triggering. Each approach has its pros and cons, depending on your requirements and preferences with respect to the complexity and manageability of the resulting system.

The cylc gscan GUI lets you monitor multiple suites at a time, and

you can define virtual groups of suites that collapse into a single state

summary.

16.3.3.1. Inter-Suite Triggering¶

A task in one suite can explicitly trigger off of a task in another suite. The full range of possible triggering conditions is supported, including custom message triggers. Remote triggering involves repeatedly querying (“polling”) the remote suite run database, not the suite server program, so it works even if the other suite is down at the time.

There is special graph syntax to support triggering off of a task in another

suite, or you can call the underlying cylc suite-state command

directly in task scripting.

In real time suites you may want to use clock-triggers to delay the onset of inter-suite polling until roughly the expected completion time of the remote task.

16.3.4. Self-Contained Suites¶

All files generated by Cylc during a suite run are confined to the suite

run directory $HOME/cylc-run/<SUITE>. However, Cylc has no control

over the locations of the programs, scripts, and files, that are executed,

read, or generated by your tasks at runtime. It is up to you to ensure that

all of this is confined to the suite run directory too, as far as possible.

Self-contained suites are more robust, easier to work with, and more portable. Multiple instances of the same suite (with different suite names) should be able to run concurrently under the same user account without mutual interference.

16.3.4.1. Avoiding External Files¶

Suites that use external scripts, executables, and files beyond the essential system libraries and utilities are vulnerable to external changes: someone else might interfere with these files without telling you.

In some case you may need to symlink to large external files anyway, if space or copy speed is a problem, but otherwise suites with private copies of all the files they need are more robust.

16.3.4.2. Installing Files At Start-up¶

Use rose suite-run file creation mode or R1

install tasks to copy files to the self-contained suite run directory at

start-up. Install tasks are preferred for time-consuming installations because

they don’t slow the suite start-up process, they can be monitored in the GUI,

they can run directly on target platforms, and you can rerun them later without

restarting the suite. If you are using symbolic links to install files under

your suite directory it is recommended that the linking should be set up to

fail if the source is missing e.g. by using mode=symlink+ for file

installation in a rose app.

16.3.4.3. Confining Ouput To The Run Directory¶

Output files should be confined to the suite run directory tree. Then all

output is easy to find, multiple instances of the same suite can run

concurrently without interference, and other users should be able to copy and

run your suite with few modifications. Cylc provides a share

directory for generated files that are used by several tasks in a suite

(see Shared Task IO Paths). Archiving tasks can use rose arch

to copy or move selected files to external locations as needed (see

Suite Housekeeping).

16.3.5. Task Host Selection¶

At sites with multiple task hosts to choose from, use

rose host-select to dynamically select appropriate task hosts

rather than hard coding particular hostnames. This enables your suite to

adapt to particular machines being down or heavily overloaded by selecting

from a group of hosts based on a series of criteria.

rose host-select will only return hosts that can be contacted by

non-interactive SSH.

16.3.6. Task Scripting¶

Non-trivial task scripting should be held in external files rather than inlined in the suite.rc. This keeps the suite definition tidy, and it allows proper shell-mode text editing and independent testing of task scripts.

For automatic access by task jobs, task-specific scripts should be kept in Rose app bin directories, and shared scripts kept in (or installed to) the suite bin directory.

16.3.6.1. Coding Standards¶

When writing your own task scripts make consistent use of appropriate coding standards such as:

16.3.6.2. Basic Functionality¶

In consideration of future users who may not be expert on the internals of your suite and its tasks, all task scripts should:

- Print clear usage information if invoked incorrectly (and via the

standard options

-h, --help). - Print useful diagnostic messages in case of error. For example, if a file was not found, the error message should contain the full path to the expected location.

- Always return correct shell exit status - zero for success, non-zero for failure. This is used by Cylc job wrapper code to detect success and failure and report it back to the suite server program.

- In shell scripts use

set -uto abort on any reference to an undefined variable. If you really need an undefined variable to evaluate to an empty string, make it explicit:FOO=${FOO:-}. - In shell scripts use

set -eto abort on any error without having to failure-check each command explicitly. - In shell scripts use

set -o pipefailto abort on any error within a pipe line. Note that all commands in the pipe line will still run, it will just exit with the right most non-zero exit status.

Note

Examples and more details are available

for the above three set commands.

16.3.7. Rose Apps¶

Rose apps allow all non-shared task configuration - which is not relevant to

workflow automation - to be moved from the suite definition into app config

files. This makes suites tidier and easier to understand, and it allows

rose edit to provide a unified metadata-enhanced view of the suite

and its apps (see Rose Metadata Compliance).

Rose apps are a clear winner for tasks with complex configuration requirements.

It matters less for those with little configuration, but for consistency and to

take full advantage of rose edit it makes sense to use Rose apps

for most tasks.

When most tasks are Rose apps, set the app-run command as a root-level default, and override it for the occasional non Rose app task:

[runtime]

[[root]]

script = rose task-run -v

[[rose-app1]]

#...

[[rose-app2]]

#...

[[hello-world]] # Not a Rose app.

script = echo "Hello World"

16.3.8. Rose Metadata Compliance¶

Rose metadata drives page layout and sort order in rose edit, plus

help information, input validity checking, macros for advanced checking and app

version upgrades, and more.

To ensure the suite and its constituent applications are being run as intended

it should be valid against any provided metadata: launch the

rose edit GUI or run rose macro --validate on the

command line to highlight any errors, and correct them prior to use. If errors

are flagged incorrectly you should endeavour to fix the metadata.

When writing a new suite or application, consider creating metadata to facilitate ease of use by others.

16.3.9. Task Independence¶

Essential dependencies must be encoded in the suite graph, but tasks should not rely unnecessarily on the action of other tasks. For example, tasks should create their own output directories if they don’t already exist, even if they would normally be created by an earlier task in the workflow. This makes it is easier to run tasks alone during development and testing.

16.3.10. Clock-Triggered Tasks¶

Tasks that wait on real time data should use clock-triggers to delay job submission until the expected data arrival time:

[scheduling]

initial cycle point = now

[[special tasks]]

# Trigger 5 min after wall-clock time is equal to cycle point.

clock-trigger = get-data(PT5M)

[[dependencies]]

[[[T00]]]

graph = get-data => process-data

Clock-triggered tasks typically have to handle late data arrival. Task execution retry delays can be used to simply retrigger the task at intervals until the data is found, but frequently retrying small tasks probably should not go to a batch scheduler, and multiple task failures will be logged for what is a essentially a normal condition (at least it is normal until the data is really late).

Rather than using task execution retry delays to repeatedly trigger a task that checks for a file, it may be better to have the task itself repeatedly poll for the data (see Rose App File Polling for example).

16.3.11. Rose App File Polling¶

Rose apps have built-in polling functionality to check repeatedly for the

existence of files before executing the main app. See the [poll]

section in Rose app config documentation. This is a good way to implement

check-and-wait functionality in clock-triggered tasks

(Clock-Triggered Tasks), for example.

It is important to note that frequent polling may be bad for some filesystems, so be sure to configure a reasonable interval between polls.

16.3.12. Task Execution Time Limits¶

Instead of setting job wall clock limits directly in batch scheduler

directives, use the execution time limit suite config item.

Cylc automatically derives the correct batch scheduler directives from this,

and it is also used to run background and at jobs via

the timeout command, and to poll tasks that haven’t reported in

finished by the configured time limit.

16.3.13. Restricting Suite Activity¶

It may be possible for large suites to overwhelm a job host by submitting too many jobs at once:

- Large suites that are not sufficiently limited by real time clock triggering or inter-cycle dependence may generate a lot of runahead (this refers to Cylc’s ability to run multiple cycles at once, restricted only by the dependencies of individual tasks).

- Some suites may have large families of tasks whose members all become ready at the same time.

These problems can be avoided with runahead limiting and internal queues, respectively.

16.3.13.1. Runahead Limiting¶

By default Cylc allows a maximum of three cycle points to be active at the same time, but this value is configurable:

[scheduling]

initial cycle point = 2020-01-01T00

# Don't allow any cycle interleaving:

max active cycle points = 1

16.3.13.2. Internal Queues¶

Tasks can be assigned to named internal queues that limit the number of members that can be active (i.e. submitted or running) at the same time:

[scheduling]

initial cycle point = 2020-01-01T00

[[queues]]

# Allow only 2 members of BIG_JOBS to run at once:

[[[big_jobs_queue]]]

limit = 2

members = BIG_JOBS

[[dependencies]]

[[[T00]]]

graph = pre => BIG_JOBS

[runtime]

[[BIG_JOBS]]

[[foo, bar, baz, ...]]

inherit = BIG_JOBS

16.3.14. Suite Housekeeping¶

Ongoing cycling suites can generate an enormous number of output files and logs so regular housekeeping is very important. Special housekeeping tasks, typically the last tasks in each cycle, should be included to archive selected important files and then delete everything at some offset from the current cycle point.

The Rose built-in apps rose_arch and rose_prune

provide an easy way to do this. They can be configured easily with

file-matching patterns and cycle point offsets to perform various housekeeping

operations on matched files.

16.3.15. Complex Jinja2 Code¶

The Jinja2 template processor provides general programming constructs, extensible with custom Python filters, that can be used to generate the suite definition. This makes it possible to write flexible multi-use suites with structure and content that varies according to various input switches. There is a cost to this flexibility however: excessive use of Jinja2 can make a suite hard to understand and maintain. It is difficult to say exactly where to draw the line, but we recommend erring on the side of simplicity and clarity: write suites that are easy to understand and therefore easy to modify for other purposes, rather than extremely complicated suites that attempt do everything out of the box but are hard to maintain and modify.

Note that use of Jinja2 loops for generating tasks is now deprecated in favour of built-in parameterized tasks - see Parameterized Tasks.

16.3.17. Automating Failure Recovery¶

16.3.17.1. Job Submission Retries¶

When submitting jobs to a remote host, use job submission retries to automatically resubmit tasks in the event of network outages. Note this is distinct from job retries for job execution failure (just below).

Job submission retries should normally be host (or host-group for

rose host-select) specific, not task-specific, so configure them in

a host (or host-group) specific family. The following suite.rc fragment

configures all HPC jobs to retry on job submission failure up to 10

times at 1 minute intervals, then another 5 times at 1 hour intervals:

[runtime]

[[HPC]] # Inherited by all jobs submitted to HPC.

[[[job]]]

submission retry delays = 10*PT1M, 5*PT1H

16.3.17.2. Job Execution Retries¶

Automatic retry on job execution failure is useful if you have good reason to believe that a simple retry will usually succeed. This may be the case if the job host is known to be flaky, or if the job only ever fails for one known reason that can be fixed on a retry. For example, if a model fails occasionally with a numerical instability that can be remedied with a short timestep rerun, then an automatic retry may be appropriate:

[runtime]

[[model]]

script = """

if [[ $CYLC_TASK_TRY_NUMBER > 1 ]]; then

SHORT_TIMESTEP=true

else

SHORT_TIMESTEP=false

fi

model.exe"""

[[[job]]]

execution retry delays = 1*PT0M

16.3.17.3. Failure Recovery Workflows¶

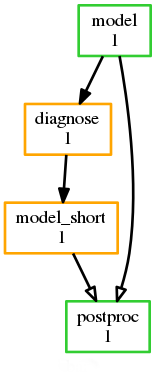

For recovery from failures that require explicit diagnosis you can configure alternate routes through the workflow, together with suicide triggers that remove the unused route. In the following example, if the model fails a diagnosis task will trigger; if it determines the cause of the failure is a known numerical instability (e.g. by parsing model job logs) it will succeed, triggering a short timestep run. Postprocessing can proceed from either the original or the short-step model run, and suicide triggers remove the unused path from the workflow:

[scheduling]

[[dependencies]]

graph = """

model | model_short => postproc

model:fail => diagnose => model_short

# Clean up with suicide triggers:

model => ! diagnose & ! model_short

model_short => ! model"""

16.3.18. Include Files¶

Include-files should not be overused, but they can sometimes be useful (e.g. see Portable Suites):

#...

{% include 'inc/foo.rc' %}

(Technically this inserts a Jinja2-rendered file template). Cylc also has a native include mechanism that pre-dates Jinja2 support and literally inlines the include-file:

#...

%include 'inc/foo.rc'

The two methods normally produce the same result, but use the Jinja2 version if you need to construct an include-file name from a variable (because Cylc include-files get inlined before Jinja2 processing is done):

#...

{% include 'inc/' ~ SITE ~ '.rc' %}